Learning RNN from scratch (deriving the parameters of an RNN)

Source: 程序员人生 | Published: 2016-07-05 14:08:30 | Views: 5494

It has been more than a year since my last original post, and I finally have some new notes to share with everyone.

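This article covers the basic principles of the RNN and gives a detailed derivation of its parameter updates (back propagation). If you want to know how the parameters of an RNN are derived, read on carefully.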

Time was limited, so the notes below inevitably contain omissions. Corrections are welcome.

The explanation follows a classic piece of RNN example code in detail. The code and its comments are at: https://github.com/weixsong/min-char-rnn

The article also verifies why cross entropy error should be used as the cost function when the output layer uses a sigmoid activation function.

Contents:

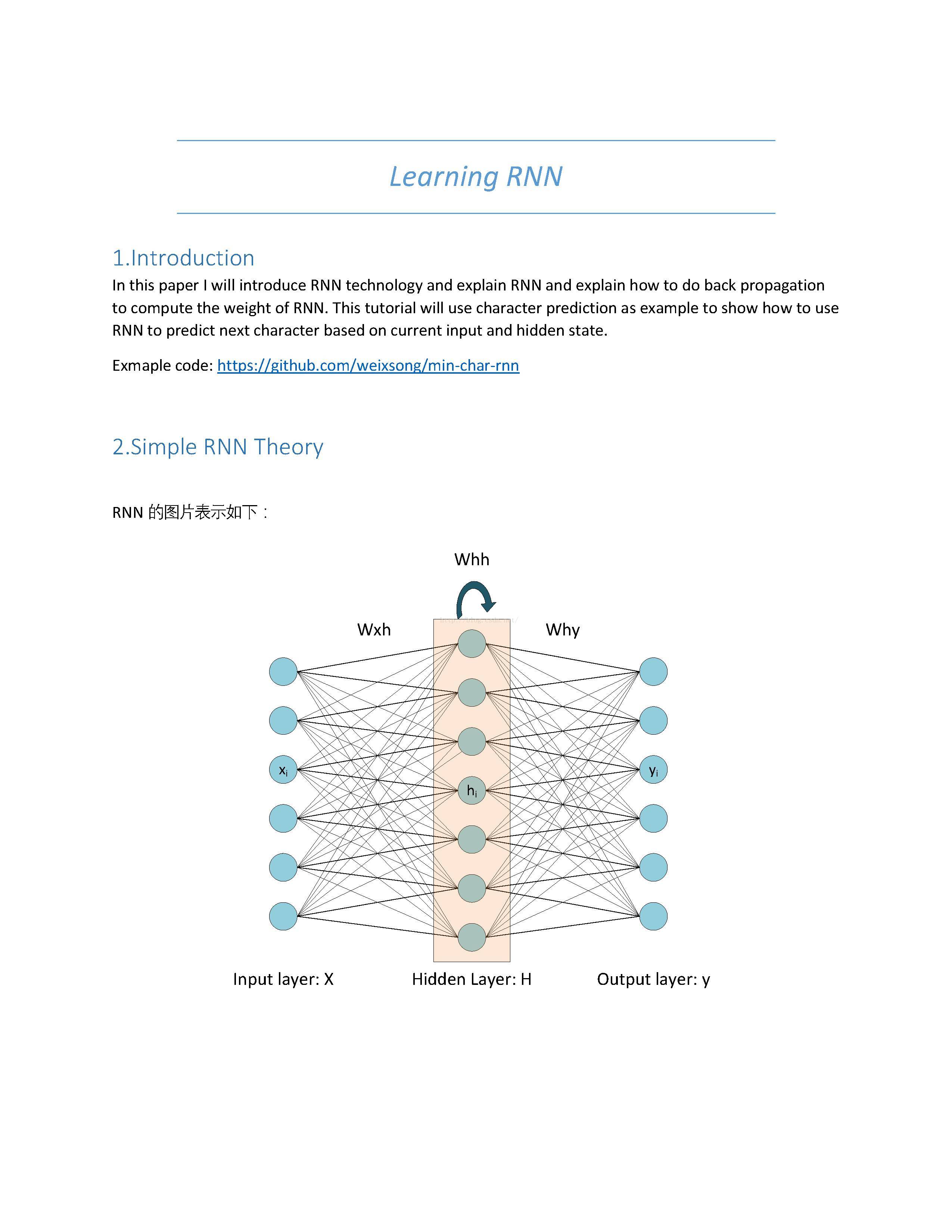

1. Introduction

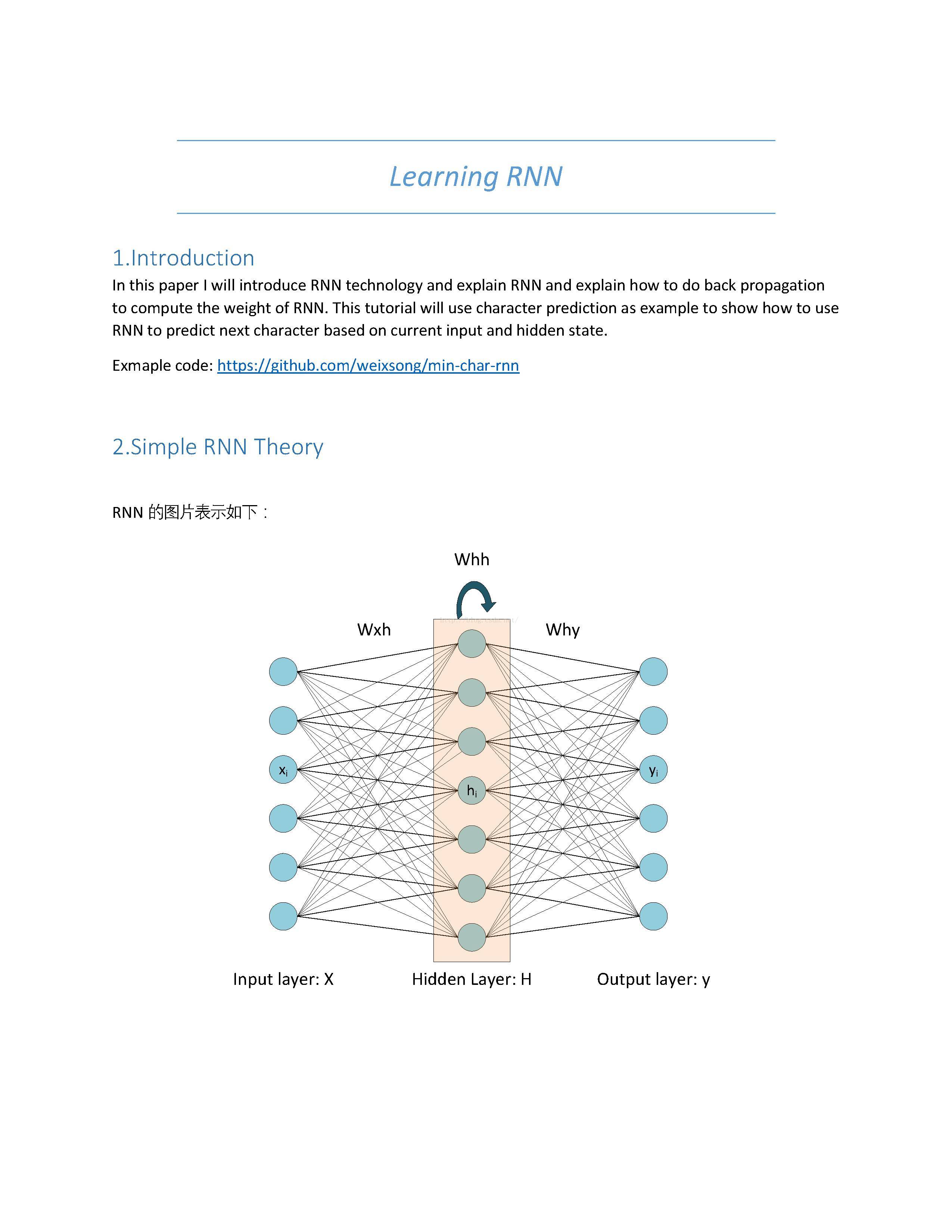

2. Simple RNN Theory

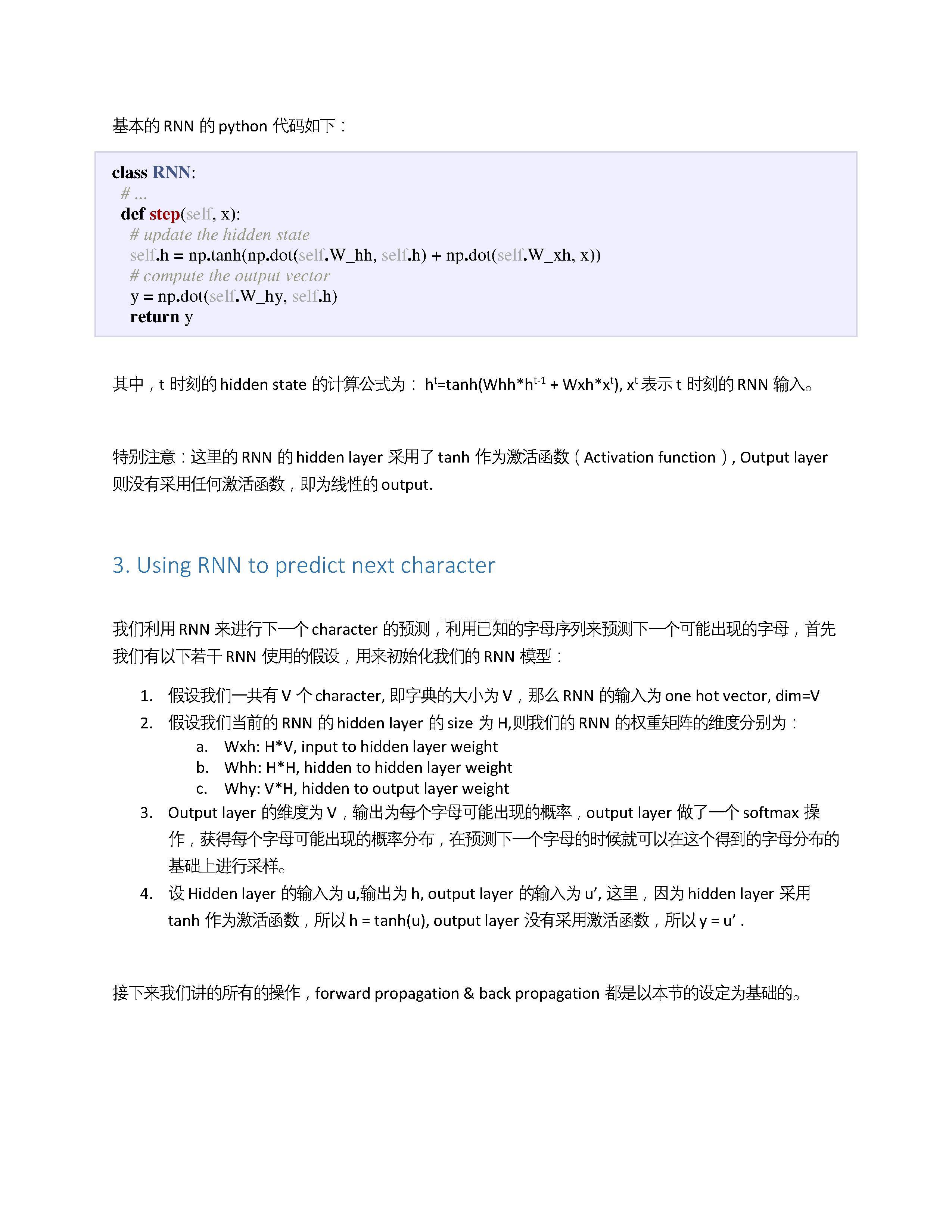

3. Using RNN to predict next character

4. Loss Function

4.1 Sum of Squared Error (Quadratic Error)

4.2 Cross Entropy Error

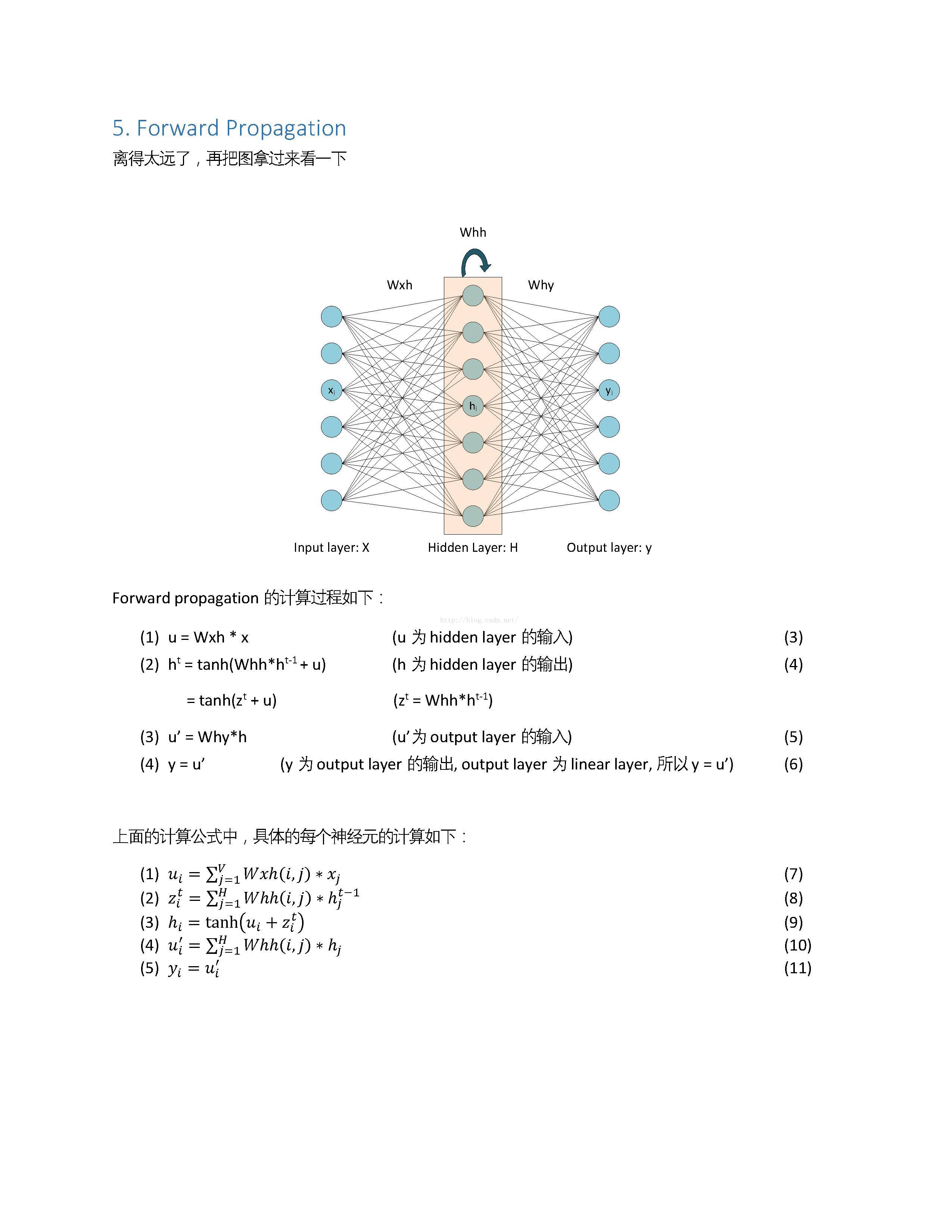

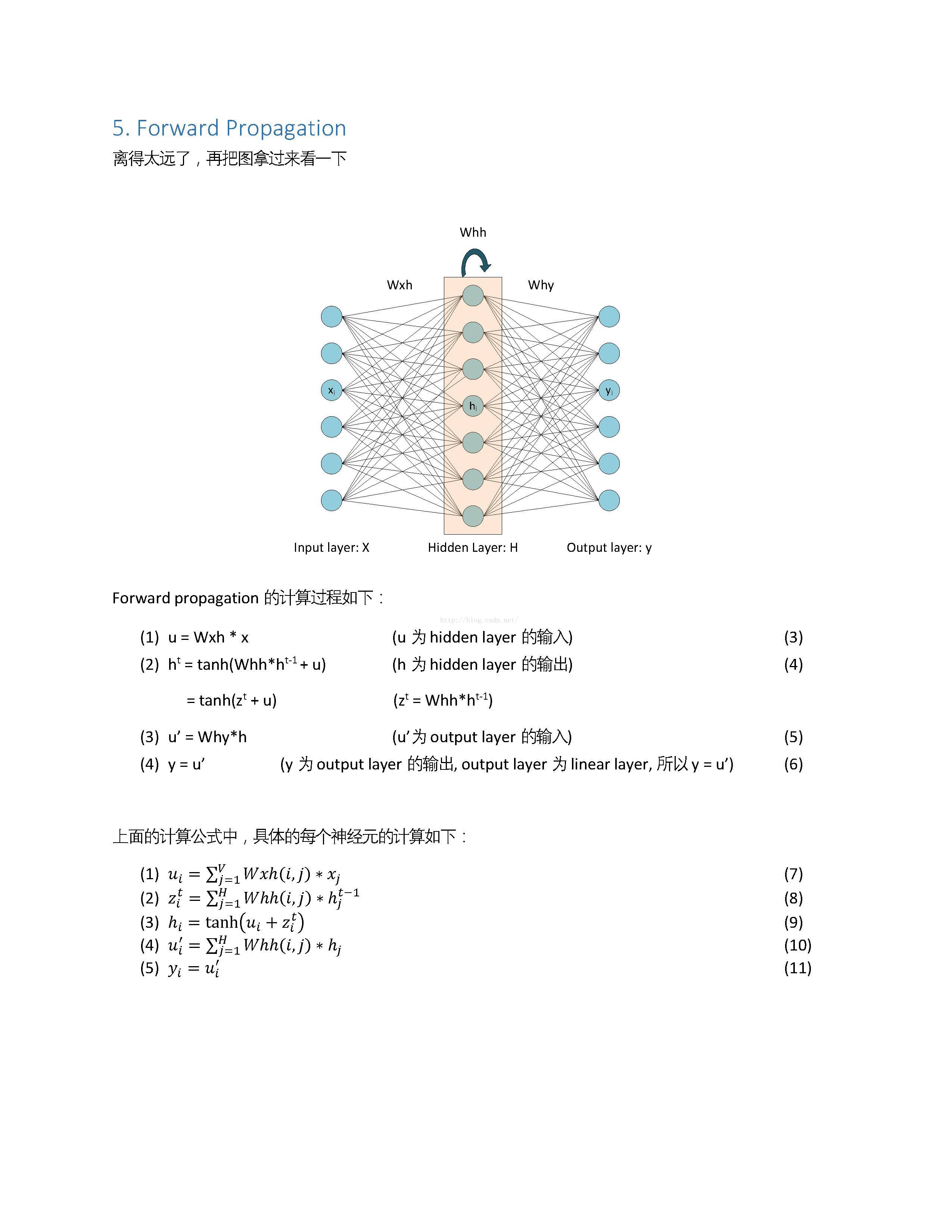

5. Forward Propagation
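
As a rough illustration of the forward pass in the character prediction setting of section 3, the sketch below runs a vanilla RNN over a short sequence of character indices. The names `Wxh`, `Whh`, `Why`, `bh`, `by`, the tanh hidden activation, the softmax output and the toy sizes are all assumptions in the style of min-char-rnn. They are not taken from the figures in this article.

```python
import numpy as np

def forward(inputs, hprev, Wxh, Whh, Why, bh, by):
    """Run a vanilla RNN over a list of character indices.

    Returns the list of hidden states and the list of per-step
    probability vectors over the next character.
    """
    vocab_size = Wxh.shape[1]
    hs, ps = [], []
    h = hprev
    for ix in inputs:
        x = np.zeros((vocab_size, 1))
        x[ix] = 1.0                          # one-hot encode the current character
        h = np.tanh(Wxh @ x + Whh @ h + bh)  # new hidden state
        y = Why @ h + by                     # unnormalised scores for the next character
        e = np.exp(y - np.max(y))            # shift by the max for numerical stability
        p = e / np.sum(e)                    # softmax probabilities
        hs.append(h)
        ps.append(p)
    return hs, ps

# Toy sizes and random weights, chosen only for illustration.
rng = np.random.default_rng(0)
vocab_size, hidden_size = 5, 8
Wxh = rng.normal(0.0, 0.01, (hidden_size, vocab_size))   # input -> hidden
Whh = rng.normal(0.0, 0.01, (hidden_size, hidden_size))  # hidden -> hidden
Why = rng.normal(0.0, 0.01, (vocab_size, hidden_size))   # hidden -> output
bh = np.zeros((hidden_size, 1))
by = np.zeros((vocab_size, 1))

hs, ps = forward([0, 3, 1], np.zeros((hidden_size, 1)), Wxh, Whh, Why, bh, by)
print(len(ps), ps[0].shape, float(ps[0].sum()))
```

Each entry of `ps` is a column vector of probabilities for the next character, so it sums to 1.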

6. Quadratic Error vs. Cross Entropy Error

6.1 Derivative of error with regard to the output of output layer

6.2 Derivative of error with regard to the input of output layer
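
To make the comparison in this section concrete, here is a minimal sketch for a single sigmoid output unit. It uses the standard textbook results, which are assumed here rather than copied from the figures: with y = sigmoid(z), the quadratic error 0.5 * (y - t)^2 gives dE/dz = (y - t) * y * (1 - y), while the cross entropy error gives dE/dz = y - t. The function names are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def grad_quadratic(z, t):
    """dE/dz for E = 0.5 * (y - t)**2 with y = sigmoid(z)."""
    y = sigmoid(z)
    return (y - t) * y * (1.0 - y)

def grad_cross_entropy(z, t):
    """dE/dz for E = -(t*ln(y) + (1-t)*ln(1-y)) with y = sigmoid(z)."""
    y = sigmoid(z)
    return y - t

# Target is 1; the more negative z is, the more wrong the output.
t = 1.0
for z in (-8.0, -4.0, 0.0):
    print(z, grad_quadratic(z, t), grad_cross_entropy(z, t))
```

When the output is badly wrong (target 1, strongly negative z), the extra factor y * (1 - y) pushes the quadratic gradient towards zero, so learning stalls. The cross entropy gradient stays close to -1, which is the usual argument for pairing a sigmoid output with cross entropy.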

7. Error Measure of RNN for Character Prediction

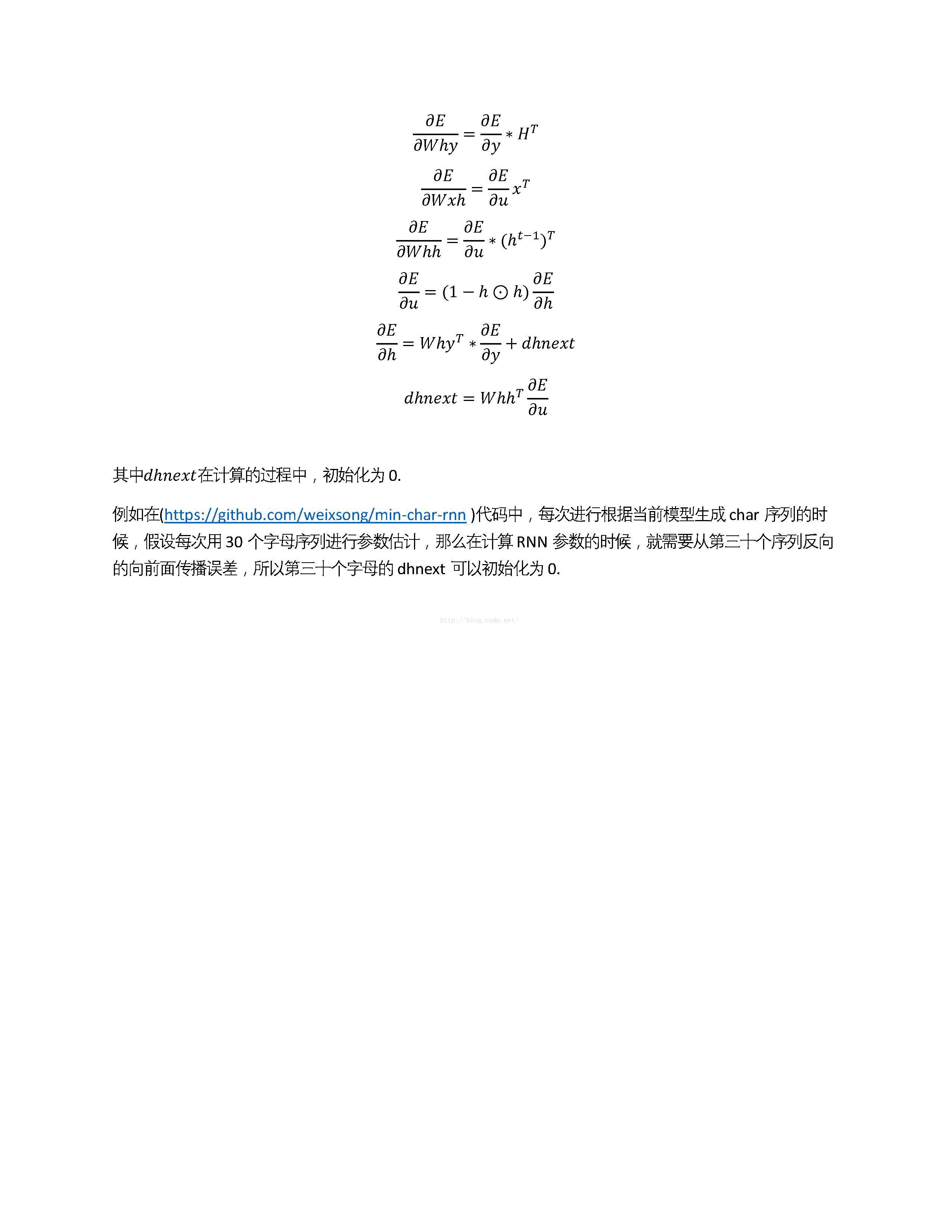

8. Back Propagation

8.1 Compute the derivative of error with regard to the output of output layer

8.2 Compute the derivative of error with regard to the input of output layer
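
A quick numerical check can make this step easier to trust. The sketch below assumes a softmax output layer with cross entropy error, as in min-char-rnn. In that case the derivative with regard to the input of the output layer is the well-known p - t, where p is the softmax output and t the one-hot target. The input values and helper names are made up for illustration.

```python
import numpy as np

def softmax(z):
    e = np.exp(z - np.max(z))   # shift by the max for numerical stability
    return e / np.sum(e)

def loss(z, target):
    """Cross entropy error when the correct class is the index `target`."""
    return -np.log(softmax(z)[target])

z = np.array([0.5, -1.2, 2.0, 0.1])   # illustrative inputs to the output layer
target = 2

# Analytic gradient: p - t, with t one-hot at the target index.
analytic = softmax(z)
analytic[target] -= 1.0

# Numerical gradient by central differences.
numeric = np.zeros_like(z)
eps = 1e-5
for i in range(len(z)):
    zp, zm = z.copy(), z.copy()
    zp[i] += eps
    zm[i] -= eps
    numeric[i] = (loss(zp, target) - loss(zm, target)) / (2 * eps)

print(np.max(np.abs(analytic - numeric)))
```

The printed value is the largest gap between the analytic and the numerical gradient, which should be tiny if the formula is right.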

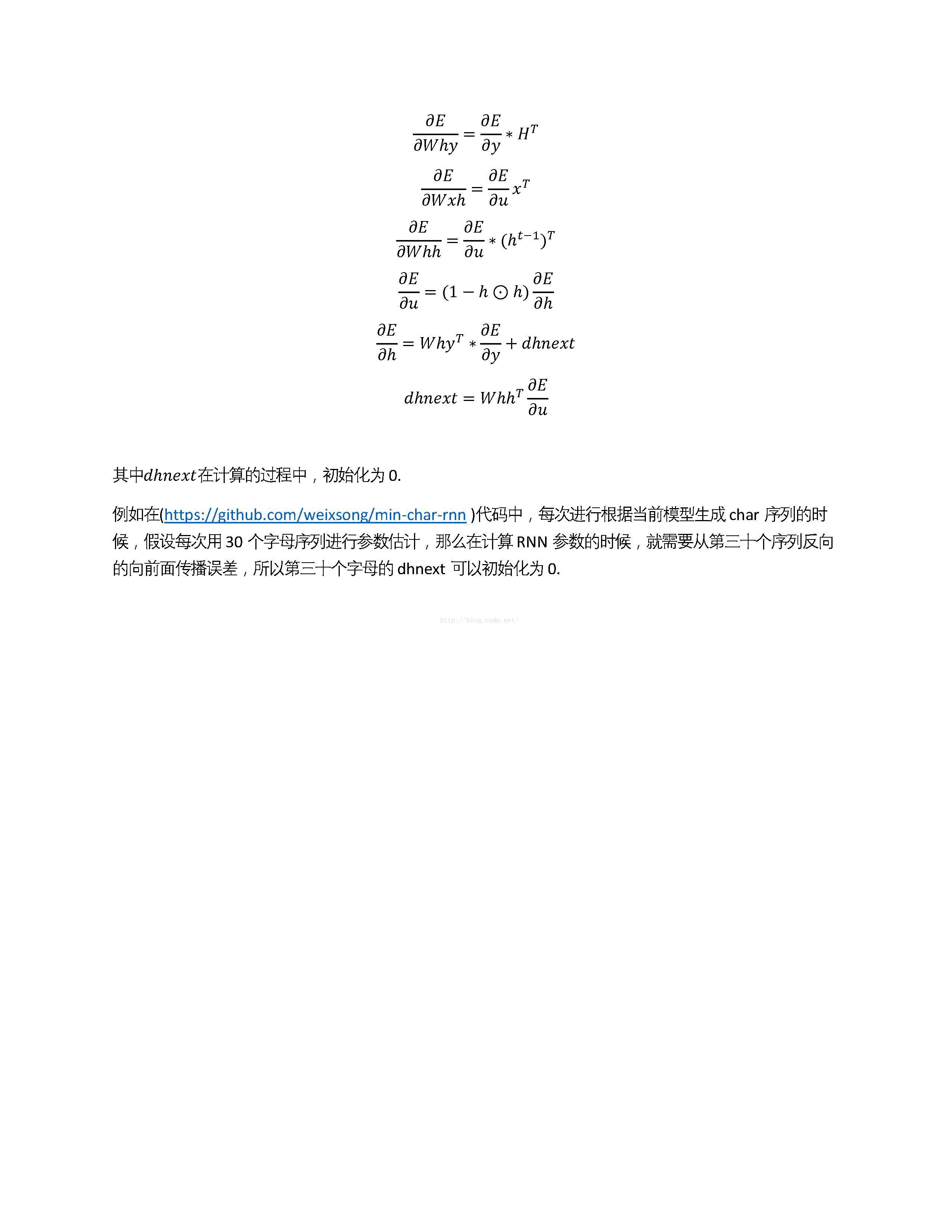

8.3 Compute the derivative of error with regard to the weight between hidden layer and output layer

8.4 Compute the derivative of error with regard to the output of hidden layer

8.5 Compute the derivative of error with regard to the input of hidden layer

8.6 Compute the derivative of error with regard to the weight between input layer and hidden layer

8.7 Compute the derivative of error with regard to the weight between hidden layer and hidden layer
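
Putting the steps of this section together, the following sketch runs a forward pass and then back propagation through time for a small vanilla RNN, and checks one hidden-to-hidden gradient numerically. The variable names, the tanh and softmax choices and the toy sizes are assumptions in the style of min-char-rnn. The mapping of lines to subsections 8.1 through 8.7 in the comments is only a reading of the headings above.

```python
import numpy as np

def loss_and_grads(inputs, targets, hprev, params):
    """Forward pass, then back propagation through time, for a vanilla RNN."""
    Wxh, Whh, Why, bh, by = params
    vocab_size = Wxh.shape[1]
    xs, hs, ps = {}, {-1: hprev}, {}
    loss = 0.0
    for t, ix in enumerate(inputs):
        xs[t] = np.zeros((vocab_size, 1))
        xs[t][ix] = 1.0                                   # one-hot input
        hs[t] = np.tanh(Wxh @ xs[t] + Whh @ hs[t - 1] + bh)
        y = Why @ hs[t] + by
        e = np.exp(y - np.max(y))
        ps[t] = e / np.sum(e)                             # softmax output
        loss += -np.log(ps[t][targets[t], 0])             # cross entropy error

    dWxh, dWhh, dWhy = np.zeros_like(Wxh), np.zeros_like(Whh), np.zeros_like(Why)
    dbh, dby = np.zeros_like(bh), np.zeros_like(by)
    dhnext = np.zeros_like(hprev)
    for t in reversed(range(len(inputs))):
        dy = ps[t].copy()
        dy[targets[t]] -= 1.0            # 8.1-8.2: error w.r.t. input of output layer
        dWhy += dy @ hs[t].T             # 8.3: hidden -> output weights
        dby += dy
        dh = Why.T @ dy + dhnext         # 8.4: error w.r.t. output of hidden layer
        dhraw = (1.0 - hs[t] ** 2) * dh  # 8.5: back through tanh to hidden input
        dbh += dhraw
        dWxh += dhraw @ xs[t].T          # 8.6: input -> hidden weights
        dWhh += dhraw @ hs[t - 1].T      # 8.7: hidden -> hidden weights
        dhnext = Whh.T @ dhraw           # error passed back to step t-1
    return loss, (dWxh, dWhh, dWhy, dbh, dby)

# Toy problem, chosen only for illustration.
rng = np.random.default_rng(0)
V, H = 4, 6
params = [rng.normal(0.0, 0.1, s) for s in [(H, V), (H, H), (V, H), (H, 1), (V, 1)]]
inputs, targets = [0, 1, 2, 3], [1, 2, 3, 0]
h0 = np.zeros((H, 1))

loss, grads = loss_and_grads(inputs, targets, h0, params)

# Numerical check of one hidden-to-hidden weight via central differences.
eps = 1e-5
Whh = params[1]
old = Whh[2, 3]
Whh[2, 3] = old + eps
lp, _ = loss_and_grads(inputs, targets, h0, params)
Whh[2, 3] = old - eps
lm, _ = loss_and_grads(inputs, targets, h0, params)
Whh[2, 3] = old                          # restore the original weight
numeric = (lp - lm) / (2 * eps)
print(grads[1][2, 3], numeric)
```

The two printed numbers are the analytic and the numerical gradient for the same weight. If they agree closely, the backward pass is consistent with the forward pass.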
