Android RakNet Series, Part 5: Video Communication with OpenCV4Android

Source: 程序员人生 | Published: 2014-12-16 08:37:39

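Introduction

The goal of bringing in OpenCV4Android is to solve video communication within the RakNet framework. This already works on Ubuntu; this article carries it over to the Android platform.

OpenCV is an open-source, cross-platform computer vision library that runs on Windows, Android, Maemo, FreeBSD, OpenBSD, iOS, Linux, and Mac OS. It is lightweight and efficient: it consists of a set of C functions and a small number of C++ classes, provides interfaces for Python, Ruby, MATLAB, and other languages, and implements many general-purpose algorithms for image processing and computer vision. OpenCV targets real-time, real-world applications. Its optimized C code gives a considerable speedup, and even faster processing is available by purchasing Intel's IPP (Integrated Performance Primitives) high-performance multimedia library.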

Related links

http://sourceforge.net/projects/opencvlibrary/files/opencv-android/ Latest project source download

http://www.opencv.org.cn/ OpenCV Chinese forum

http://opencv.org/?s=+android&x=0&y=0 OpenCV official site

http://www.code.opencv.org/projects/opencv/issues Issue tracker on the OpenCV official site

http://docs.opencv.org/platforms/android/service/doc/index.html or http://docs.opencv.org/trunk/ Documentation on the OpenCV official site

http://www.jayrambhia.com/android/ Related blog

Details

Project overview

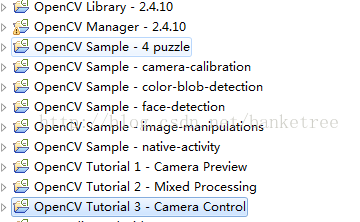

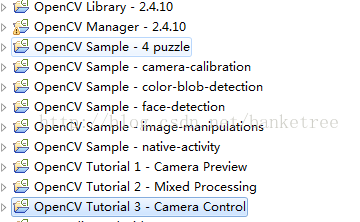

The official package provides one library project (OpenCV Library - 2.4.10) and nine demo projects (OpenCV Tutorial 1 - Camera Preview, OpenCV Tutorial 2 - Mixed Processing, OpenCV Tutorial 3 - Camera Control, OpenCV Sample - 15 puzzle, OpenCV Sample - camera-calibration, OpenCV Sample - color-blob-detection, OpenCV Sample - face-detection, OpenCV Sample - image-manipulations, OpenCV Sample - native-activity). Together with the OpenCV Manager project (OpenCV Manager - 2.4.10), that makes 11 projects in total, as shown in the figures:

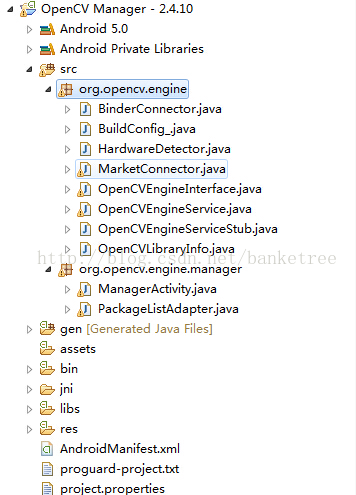

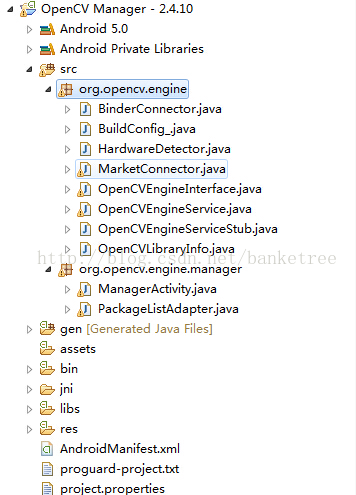

OpenCV Manager - 2.4.10

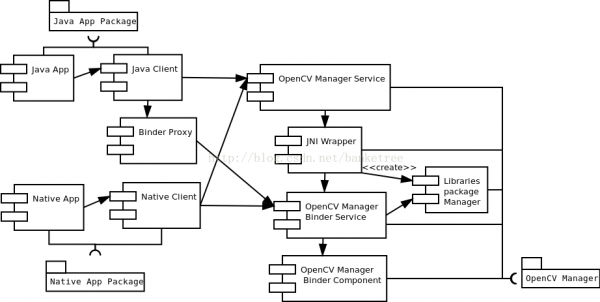

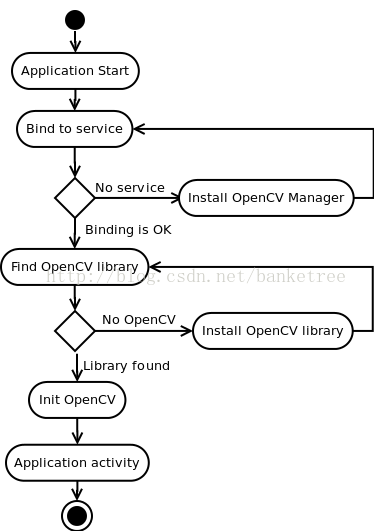

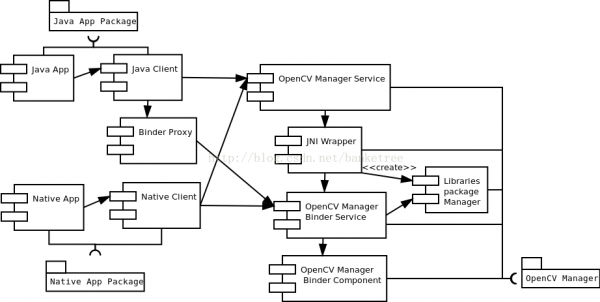

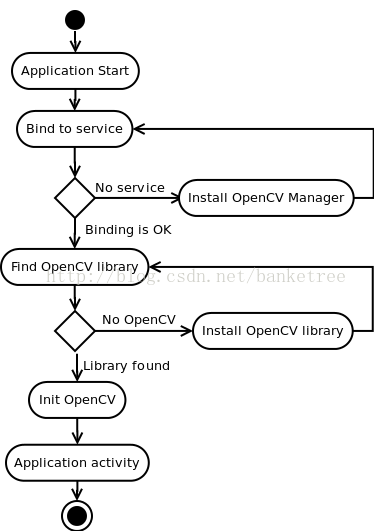

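The official demos need the OpenCV Manager project in order to run. It selects the library files that match the phone and loads them; the demos communicate with it by binding to its service.

If it is not installed, running a demo shows the dialog: "OpenCV Manager package was not found! Try to install it?" Of course, this does not happen when an app uses the libraries directly, because all this project does is load the libraries.

The project is shown in the figure:

The official explanation:

The library-loading code is as follows: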

public class BinderConnector {

private static boolean mIsReady = false;

private MarketConnector mMarket;

static {

try {

System.loadLibrary("OpenCVEngine");

System.loadLibrary("OpenCVEngine_jni");

mIsReady = true;

} catch (UnsatisfiedLinkError localUnsatisfiedLinkError) {

mIsReady = false;

localUnsatisfiedLinkError.printStackTrace();

}

}

public BinderConnector(MarketConnector paramMarketConnector) {

this.mMarket = paramMarketConnector;

}

private native void Final();

private native boolean Init(MarketConnector paramMarketConnector);

public native IBinder Connect();

public boolean Disconnect() {

if (mIsReady)

Final();

return mIsReady;

}

public boolean Init() {

boolean bool = false;

if (mIsReady)

bool = Init(this.mMarket);

return bool;

}

}

public class HardwareDetector { // hardware detection

public static final int ARCH_ARMv5 = 0x4000000;

public static final int ARCH_ARMv6 = 0x8000000;

public static final int ARCH_ARMv7 = 0x10000000;

public static final int ARCH_ARMv8 = 0x20000000;

public static final int ARCH_MIPS = 0x40000000;

public static final int ARCH_UNKNOWN = -1;

public static final int ARCH_X64 = 0x2000000;

public static final int ARCH_X86 = 0x1000000;

public static final int FEATURES_HAS_GPU = 65536;

public static final int FEATURES_HAS_NEON = 8;

public static final int FEATURES_HAS_NEON2 = 22;

public static final int FEATURES_HAS_SSE = 1;

public static final int FEATURES_HAS_SSE2 = 2;

public static final int FEATURES_HAS_SSE3 = 4;

public static final int FEATURES_HAS_VFPv3 = 2;

public static final int FEATURES_HAS_VFPv3d16 = 1;

public static final int FEATURES_HAS_VFPv4 = 4;

public static final int PLATFORM_TEGRA = 1;

public static final int PLATFORM_TEGRA2 = 2;

public static final int PLATFORM_TEGRA3 = 3;

public static final int PLATFORM_TEGRA4 = 5;

public static final int PLATFORM_TEGRA4i = 4;

public static final int PLATFORM_TEGRA5 = 6;

public static final int PLATFORM_UNKNOWN = -1;

public static boolean mIsReady = false;

static {

try {

System.loadLibrary("OpenCVEngine");

System.loadLibrary("OpenCVEngine_jni");

mIsReady = true;

} catch (UnsatisfiedLinkError localUnsatisfiedLinkError) {

mIsReady = false;

localUnsatisfiedLinkError.printStackTrace();

}

}

public static native int DetectKnownPlatforms();

public static native int GetCpuID();

public static native String GetPlatformName();

public static native int GetProcessorCount();

}
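The constants in HardwareDetector look like bit flags. The sketch below shows one way such an id could be decoded. It rests on an assumption the listing does not confirm: that GetCpuID() returns the ARCH_* and FEATURES_* bits OR-ed together. CpuIdDecoder, archName, and hasNeon are hypothetical names used only for illustration.

```java
// Hypothetical helper for illustration; not part of OpenCV Manager.
// Assumption: a CPU id is an OR of one ARCH_* bit and some FEATURES_* bits.
final class CpuIdDecoder {
    // Values copied from the HardwareDetector listing.
    static final int ARCH_X86 = 0x1000000;
    static final int ARCH_X64 = 0x2000000;
    static final int ARCH_ARMv5 = 0x4000000;
    static final int ARCH_ARMv6 = 0x8000000;
    static final int ARCH_ARMv7 = 0x10000000;
    static final int ARCH_ARMv8 = 0x20000000;
    static final int ARCH_MIPS = 0x40000000;
    static final int ARCH_UNKNOWN = -1;
    static final int FEATURES_HAS_NEON = 8;

    private static final int ARM_MASK =
            ARCH_ARMv5 | ARCH_ARMv6 | ARCH_ARMv7 | ARCH_ARMv8;

    // ARCH_UNKNOWN is -1 (all bits set), so it must be tested first;
    // otherwise every bit test below would succeed.
    static String archName(int cpuId) {
        if (cpuId == ARCH_UNKNOWN) return "unknown";
        if ((cpuId & ARCH_ARMv8) != 0) return "armv8";
        if ((cpuId & ARCH_ARMv7) != 0) return "armv7";
        if ((cpuId & ARCH_ARMv6) != 0) return "armv6";
        if ((cpuId & ARCH_ARMv5) != 0) return "armv5";
        if ((cpuId & ARCH_X64) != 0) return "x64";
        if ((cpuId & ARCH_X86) != 0) return "x86";
        if ((cpuId & ARCH_MIPS) != 0) return "mips";
        return "unknown";
    }

    // Feature values overlap between families (FEATURES_HAS_SSE and
    // FEATURES_HAS_VFPv3d16 are both 1), so a feature bit only has a
    // meaning once the architecture family is known.
    static boolean hasNeon(int cpuId) {
        return cpuId != ARCH_UNKNOWN
                && (cpuId & ARM_MASK) != 0
                && (cpuId & FEATURES_HAS_NEON) != 0;
    }
}
```

For example, under this assumption `CpuIdDecoder.archName(CpuIdDecoder.ARCH_ARMv7 | CpuIdDecoder.FEATURES_HAS_NEON)` yields "armv7", while the same feature bit combined with ARCH_X86 is not reported as NEON.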

public class OpenCVLibraryInfo {

private String mLibraryList;

private long mNativeObj;

private String mPackageName;

private String mVersionName;

public OpenCVLibraryInfo(String paramString) {

mNativeObj = open(paramString + "/libopencv_info.so");

if (this.mNativeObj == 0L)

return;

this.mPackageName = getPackageName(this.mNativeObj);

this.mLibraryList = getLibraryList(this.mNativeObj);

this.mVersionName = getVersionName(this.mNativeObj);

close(this.mNativeObj);

}

private native void close(long paramLong);

private native String getLibraryList(long paramLong);

private native String getPackageName(long paramLong);

private native String getVersionName(long paramLong);

private native long open(String paramString);

public String libraryList() {

return this.mLibraryList;

}

public String packageName() {

return this.mPackageName;

}

public boolean status() {

if (mNativeObj == 0L || mLibraryList.length() == 0)

return false;

return true;

}

public String versionName() {

return this.mVersionName;

}

}

// Key service: this is the service the demos bind to; it matches the phone and loads the libraries

public class OpenCVEngineService extends Service {

private static final String TAG = "OpenCVEngine/Service";

private IBinder mEngineInterface = null;

private MarketConnector mMarket;

private BinderConnector mNativeBinder;

public void OnDestroy() {

Log.i("OpenCVEngine/Service", "OpenCV Engine service destruction");

this.mNativeBinder.Disconnect();

}

public IBinder onBind(Intent paramIntent) {

Log.i("OpenCVEngine/Service", "Service onBind called for intent "

+ paramIntent.toString());

return this.mEngineInterface;

}

public void onCreate() {

Log.i("OpenCVEngine/Service", "Service starting");

super.onCreate();

Log.i("OpenCVEngine/Service", "Engine binder component creating");

this.mMarket = new MarketConnector(getBaseContext());

this.mNativeBinder = new BinderConnector(this.mMarket);

if (this.mNativeBinder.Init()) {

this.mEngineInterface = this.mNativeBinder.Connect();

Log.i("OpenCVEngine/Service", "Service started successfully");

}

else {

Log.e("OpenCVEngine/Service",

"Cannot initialize native part of OpenCV Manager!");

Log.e("OpenCVEngine/Service", "Using stub instead");

// Fall back to the Java stub, as the log message says

this.mEngineInterface = new OpenCVEngineInterface(this);

}

}

public boolean onUnbind(Intent paramIntent) {

Log.i("OpenCVEngine/Service", "Service onUnbind called for intent "

+ paramIntent.toString());

return true;

}

}

Its AndroidManifest.xml is as follows:

<?xml version="1.0" encoding="utf-8"?>

<manifest android:versionCode="2191" android:versionName="2.19" package="org.opencv.engine"

xmlns:android="http://schemas.android.com/apk/res/android">

<uses-feature android:name="android.hardware.touchscreen" android:required="false" />

<application android:label="@string/app_name" android:icon="@drawable/icon">

<service android:name="OpenCVEngineService" android:exported="true" android:process=":OpenCVEngineProcess">

<intent-filter>

<action android:name="org.opencv.engine.BIND" />

</intent-filter>

</service>

<activity android:label="@string/app_name" android:name="org.opencv.engine.manager.ManagerActivity" android:screenOrientation="portrait">

<intent-filter>

<action android:name="android.intent.action.MAIN" />

<category android:name="android.intent.category.LAUNCHER" />

</intent-filter>

</activity>

</application>

</manifest>

OpenCV Tutorial 1 - Camera Preview

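The demo consists of a single file and shows a camera preview. It offers two approaches: one drives the camera from the Java layer, the other from the native layer.

As shown in the figure:

The key views are as follows: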

<org.opencv.android.JavaCameraView

android:layout_width="fill_parent"

android:layout_height="fill_parent"

android:visibility="gone"

android:id="@+id/tutorial1_activity_java_surface_view"

opencv:show_fps="true"

opencv:camera_id="any" />

<org.opencv.android.NativeCameraView

android:layout_width="fill_parent"

android:layout_height="fill_parent"

android:visibility="gone"

android:id="@+id/tutorial1_activity_native_surface_view"

opencv:show_fps="true"

opencv:camera_id="any" />

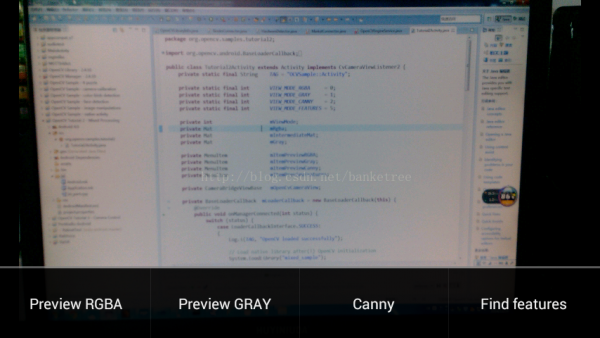

OpenCV Tutorial 2 - Mixed Processing

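This demo consists of one Java file and one C++ file and implements four kinds of mixed processing.

As shown in the figure:

The native code is as follows: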

JNIEXPORT void JNICALL Java_org_opencv_samples_tutorial2_Tutorial2Activity_FindFeatures(JNIEnv*, jobject, jlong addrGray, jlong addrRgba)

{

Mat& mGr = *(Mat*)addrGray;

Mat& mRgb = *(Mat*)addrRgba;

vector<KeyPoint> v;

FastFeatureDetector detector(50);

detector.detect(mGr, v);

for( unsigned int i = 0; i < v.size(); i++ )

{

const KeyPoint& kp = v[i];

circle(mRgb, Point(kp.pt.x, kp.pt.y), 10, Scalar(255,0,0,255));

}

}

The view used is as follows:

<org.opencv.android.JavaCameraView

android:layout_width="fill_parent"

android:layout_height="fill_parent"

android:id="@+id/tutorial2_activity_surface_view" />

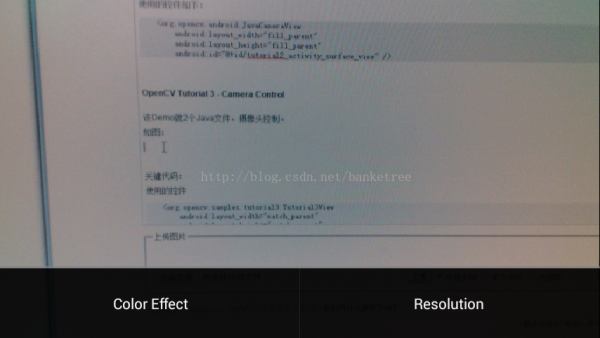

OpenCV Tutorial 3 - Camera Control

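This demo consists of two Java files and demonstrates camera control: it switches the color effect and the camera resolution.

The result is shown in the figure:

Key code:

The view used: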

<org.opencv.samples.tutorial3.Tutorial3View

android:layout_width="match_parent"

android:layout_height="match_parent"

android:visibility="gone"

android:id="@+id/tutorial3_activity_java_surface_view" />

public class Tutorial3View extends JavaCameraView implements PictureCallback {

private static final String TAG = "Sample::Tutorial3View";

private String mPictureFileName;

public Tutorial3View(Context context, AttributeSet attrs) {

super(context, attrs);

}

public List<String> getEffectList() {

return mCamera.getParameters().getSupportedColorEffects();

}

public boolean isEffectSupported() {

return (mCamera.getParameters().getColorEffect() != null);

}

public String getEffect() {

return mCamera.getParameters().getColorEffect();

}

public void setEffect(String effect) {

Camera.Parameters params = mCamera.getParameters();

params.setColorEffect(effect);

mCamera.setParameters(params);

}

public List<Size> getResolutionList() {

return mCamera.getParameters().getSupportedPreviewSizes();

}

public void setResolution(Size resolution) {

disconnectCamera();

mMaxHeight = resolution.height;

mMaxWidth = resolution.width;

connectCamera(getWidth(), getHeight());

}

public Size getResolution() {

return mCamera.getParameters().getPreviewSize();

}

public void takePicture(final String fileName) {

Log.i(TAG, "Taking picture");

this.mPictureFileName = fileName;

// Postview and jpeg are sent in the same buffers if the queue is not empty when performing a capture.

// Clear up buffers to avoid mCamera.takePicture to be stuck because of a memory issue

mCamera.setPreviewCallback(null);

// PictureCallback is implemented by the current class

mCamera.takePicture(null, null, this);

}

@Override

public void onPictureTaken(byte[] data, Camera camera) {

Log.i(TAG, "Saving a bitmap to file");

// The camera preview was automatically stopped. Start it again.

mCamera.startPreview();

mCamera.setPreviewCallback(this);

// Write the image in a file (in jpeg format)

try {

FileOutputStream fos = new FileOutputStream(mPictureFileName);

fos.write(data);

fos.close();

} catch (java.io.IOException e) {

Log.e("PictureDemo", "Exception in photoCallback", e);

}

}

}

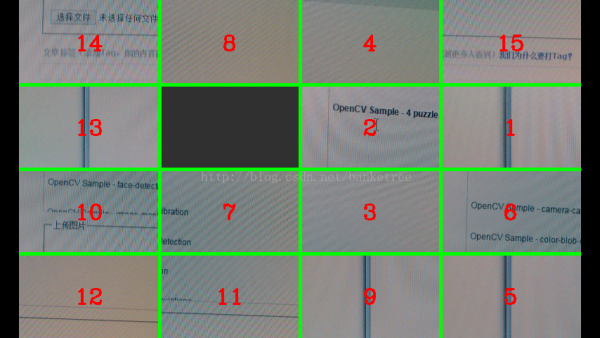

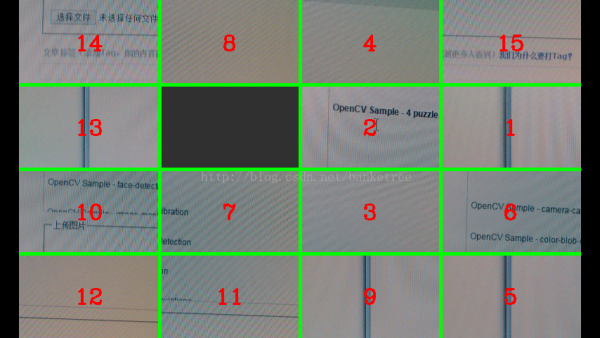

OpenCV Sample - 15 puzzle

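This demo consists of two Java files. It cuts the camera image into tiles and displays them scrambled.

The result is shown in the figure:

The main code is as follows:

public class Puzzle15Processor { // transforms the camera frame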

private static final int GRID_SIZE = 4;

private static final int GRID_AREA = GRID_SIZE * GRID_SIZE;

private static final int GRID_EMPTY_INDEX = GRID_AREA - 1;

private static final String TAG = "Puzzle15Processor";

private static final Scalar GRID_EMPTY_COLOR = new Scalar(0x33, 0x33, 0x33, 0xFF);

private int[] mIndexes;

private int[] mTextWidths;

private int[] mTextHeights;

private Mat mRgba15;

private Mat[] mCells15;

private boolean mShowTileNumbers = true;

public Puzzle15Processor() {

mTextWidths = new int[GRID_AREA];

mTextHeights = new int[GRID_AREA];

mIndexes = new int [GRID_AREA];

for (int i = 0; i < GRID_AREA; i++)

mIndexes[i] = i;

}

/* this method is intended to make processor prepared for a new game */

public synchronized void prepareNewGame() {

do {

shuffle(mIndexes);

} while (!isPuzzleSolvable());

}

/* This method is to make the processor know the size of the frames that

* will be delivered via puzzleFrame.

* If the frames will be different size - then the result is unpredictable

*/

public synchronized void prepareGameSize(int width, int height) {

mRgba15 = new Mat(height, width, CvType.CV_8UC4);

mCells15 = new Mat[GRID_AREA];

for (int i = 0; i < GRID_SIZE; i++) {

for (int j = 0; j < GRID_SIZE; j++) {

int k = i * GRID_SIZE + j;

mCells15[k] = mRgba15.submat(i * height / GRID_SIZE, (i + 1) * height / GRID_SIZE, j * width / GRID_SIZE, (j + 1) * width / GRID_SIZE);

}

}

for (int i = 0; i < GRID_AREA; i++) {

Size s = Core.getTextSize(Integer.toString(i + 1), 3/* CV_FONT_HERSHEY_COMPLEX */, 1, 2, null);

mTextHeights[i] = (int) s.height;

mTextWidths[i] = (int) s.width;

}

}

/* this method to be called from the outside. it processes the frame and shuffles

* the tiles as specified by mIndexes array

*/

public synchronized Mat puzzleFrame(Mat inputPicture) {

Mat[] cells = new Mat[GRID_AREA];

int rows = inputPicture.rows();

int cols = inputPicture.cols();

rows = rows - rows%4;

cols = cols - cols%4;

for (int i = 0; i < GRID_SIZE; i++) {

for (int j = 0; j < GRID_SIZE; j++) {

int k = i * GRID_SIZE + j;

cells[k] = inputPicture.submat(i * inputPicture.rows() / GRID_SIZE, (i + 1) * inputPicture.rows() / GRID_SIZE, j * inputPicture.cols()/ GRID_SIZE, (j + 1) * inputPicture.cols() / GRID_SIZE);

}

}

rows = rows - rows%4;

cols = cols - cols%4;

// copy shuffled tiles

for (int i = 0; i < GRID_AREA; i++) {

int idx = mIndexes[i];

if (idx == GRID_EMPTY_INDEX)

mCells15[i].setTo(GRID_EMPTY_COLOR);

else {

cells[idx].copyTo(mCells15[i]);

if (mShowTileNumbers) {

Core.putText(mCells15[i], Integer.toString(1 + idx), new Point((cols / GRID_SIZE - mTextWidths[idx]) / 2,

(rows / GRID_SIZE + mTextHeights[idx]) / 2), 3/* CV_FONT_HERSHEY_COMPLEX */, 1, new Scalar(255, 0, 0, 255), 2);

}

}

}

for (int i = 0; i < GRID_AREA; i++)

cells[i].release();

drawGrid(cols, rows, mRgba15);

return mRgba15;

}

public void toggleTileNumbers() {

mShowTileNumbers = !mShowTileNumbers;

}

public void deliverTouchEvent(int x, int y) {

int rows = mRgba15.rows();

int cols = mRgba15.cols();

int row = (int) Math.floor(y * GRID_SIZE / rows);

int col = (int) Math.floor(x * GRID_SIZE / cols);

if (row < 0 || row >= GRID_SIZE || col < 0 || col >= GRID_SIZE) {

Log.e(TAG, "It is not expected to get touch event outside of picture");

return ;

}

int idx = row * GRID_SIZE + col;

int idxtoswap = -1;

// left

if (idxtoswap < 0 && col > 0)

if (mIndexes[idx - 1] == GRID_EMPTY_INDEX)

idxtoswap = idx - 1;

// right

if (idxtoswap < 0 && col < GRID_SIZE - 1)

if (mIndexes[idx + 1] == GRID_EMPTY_INDEX)

idxtoswap = idx + 1;

// top

if (idxtoswap < 0 && row > 0)

if (mIndexes[idx - GRID_SIZE] == GRID_EMPTY_INDEX)

idxtoswap = idx - GRID_SIZE;

// bottom

if (idxtoswap < 0 && row < GRID_SIZE - 1)

if (mIndexes[idx + GRID_SIZE] == GRID_EMPTY_INDEX)

idxtoswap = idx + GRID_SIZE;

// swap

if (idxtoswap >= 0) {

synchronized (this) {

int touched = mIndexes[idx];

mIndexes[idx] = mIndexes[idxtoswap];

mIndexes[idxtoswap] = touched;

}

}

}

private void drawGrid(int cols, int rows, Mat drawMat) {

for (int i = 1; i < GRID_SIZE; i++) {

Core.line(drawMat, new Point(0, i * rows / GRID_SIZE), new Point(cols, i * rows / GRID_SIZE), new Scalar(0, 255, 0, 255), 3);

Core.line(drawMat, new Point(i * cols / GRID_SIZE, 0), new Point(i * cols / GRID_SIZE, rows), new Scalar(0, 255, 0, 255), 3);

}

}

private static void shuffle(int[] array) {

for (int i = array.length; i > 1; i--) {

int temp = array[i - 1];

int randIx = (int) (Math.random() * i);

array[i - 1] = array[randIx];

array[randIx] = temp;

}

}

private boolean isPuzzleSolvable() {

int sum = 0;

for (int i = 0; i < GRID_AREA; i++) {

if (mIndexes[i] == GRID_EMPTY_INDEX)

sum += (i / GRID_SIZE) + 1;

else {

int smaller = 0;

for (int j = i + 1; j < GRID_AREA; j++) {

if (mIndexes[j] < mIndexes[i])

smaller++;

}

sum += smaller;

}

}

return sum % 2 == 0;

}

}
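The shuffle-until-solvable loop in prepareNewGame() relies on the parity test in isPuzzleSolvable(): the number of inversions among the tiles, plus the 1-based row of the empty cell, must be even. Below is a minimal sketch of the same test without any OpenCV dependency. PuzzleParity and its method names are hypothetical, and java.util.Random replaces Math.random() so that a run can be seeded.

```java
import java.util.Random;

// Hypothetical, OpenCV-free restatement of the parity test in the listing.
final class PuzzleParity {
    static final int GRID_SIZE = 4;
    static final int GRID_AREA = GRID_SIZE * GRID_SIZE;
    static final int EMPTY = GRID_AREA - 1; // index value of the empty tile

    // Tiles 0..15 in order; the empty tile (15) sits in the last cell.
    static int[] solved() {
        int[] tiles = new int[GRID_AREA];
        for (int i = 0; i < GRID_AREA; i++) tiles[i] = i;
        return tiles;
    }

    // Same rule as isPuzzleSolvable(): inversions + (row of empty + 1) is even.
    static boolean isSolvable(int[] tiles) {
        int sum = 0;
        for (int i = 0; i < GRID_AREA; i++) {
            if (tiles[i] == EMPTY) {
                sum += i / GRID_SIZE + 1;
            } else {
                for (int j = i + 1; j < GRID_AREA; j++) {
                    if (tiles[j] < tiles[i]) sum++;
                }
            }
        }
        return sum % 2 == 0;
    }

    // Fisher-Yates shuffle, repeated until the layout passes the parity test.
    static int[] shuffledSolvable(Random rng) {
        int[] tiles = solved();
        do {
            for (int i = tiles.length; i > 1; i--) {
                int r = rng.nextInt(i);
                int tmp = tiles[i - 1];
                tiles[i - 1] = tiles[r];
                tiles[r] = tmp;
            }
        } while (!isSolvable(tiles));
        return tiles;
    }
}
```

The solved layout passes the test (no inversions, empty cell in row 4), while swapping two neighbouring tiles adds a single inversion and makes the layout unsolvable. Roughly half of all random permutations are rejected, so the loop usually ends after a few rounds.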

OpenCV Sample - camera-calibration

This demo consists of four Java files and implements camera calibration.

The result is shown in the figure:

The view used:

<org.opencv.android.JavaCameraView

android:layout_width="fill_parent"

android:layout_height="fill_parent"

android:id="@+id/camera_calibration_java_surface_view" />

OpenCV Sample - color-blob-detection

This demo consists of two Java files and implements color blob detection.

The result is shown in the figure:

The view used:

<org.opencv.android.JavaCameraView

android:layout_width="fill_parent"

android:layout_height="fill_parent"

android:id="@+id/color_blob_detection_activity_surface_view" />

public class ColorBlobDetector { // color matching

// Lower and Upper bounds for range checking in HSV color space

private Scalar mLowerBound = new Scalar(0);

private Scalar mUpperBound = new Scalar(0);

// Minimum contour area in percent for contours filtering

private static double mMinContourArea = 0.1;

// Color radius for range checking in HSV color space

private Scalar mColorRadius = new Scalar(25,50,50,0);

private Mat mSpectrum = new Mat();

private List<MatOfPoint> mContours = new ArrayList<MatOfPoint>();

// Cache

Mat mPyrDownMat = new Mat();

Mat mHsvMat = new Mat();

Mat mMask = new Mat();

Mat mDilatedMask = new Mat();

Mat mHierarchy = new Mat();

public void setColorRadius(Scalar radius) {

mColorRadius = radius;

}

public void setHsvColor(Scalar hsvColor) {

double minH = (hsvColor.val[0] >= mColorRadius.val[0]) ? hsvColor.val[0]-mColorRadius.val[0] : 0;

double maxH = (hsvColor.val[0]+mColorRadius.val[0] <= 255) ? hsvColor.val[0]+mColorRadius.val[0] : 255;

mLowerBound.val[0] = minH;

mUpperBound.val[0] = maxH;

mLowerBound.val[1] = hsvColor.val[1] - mColorRadius.val[1];

mUpperBound.val[1] = hsvColor.val[1] + mColorRadius.val[1];

mLowerBound.val[2] = hsvColor.val[2] - mColorRadius.val[2];

mUpperBound.val[2] = hsvColor.val[2] + mColorRadius.val[2];

mLowerBound.val[3] = 0;

mUpperBound.val[3] = 255;

Mat spectrumHsv = new Mat(1, (int)(maxH-minH), CvType.CV_8UC3);

for (int j = 0; j < maxH-minH; j++) {

byte[] tmp = {(byte)(minH+j), (byte)255, (byte)255};

spectrumHsv.put(0, j, tmp);

}

Imgproc.cvtColor(spectrumHsv, mSpectrum, Imgproc.COLOR_HSV2RGB_FULL, 4);

}

public Mat getSpectrum() {

return mSpectrum;

}

public void setMinContourArea(double area) {

mMinContourArea = area;

}

public void process(Mat rgbaImage) {

Imgproc.pyrDown(rgbaImage, mPyrDownMat);

Imgproc.pyrDown(mPyrDownMat, mPyrDownMat);

Imgproc.cvtColor(mPyrDownMat, mHsvMat, Imgproc.COLOR_RGB2HSV_FULL);

Core.inRange(mHsvMat, mLowerBound, mUpperBound, mMask);

Imgproc.dilate(mMask, mDilatedMask, new Mat());

List<MatOfPoint> contours = new ArrayList<MatOfPoint>();

Imgproc.findContours(mDilatedMask, contours, mHierarchy, Imgproc.RETR_EXTERNAL, Imgproc.CHAIN_APPROX_SIMPLE);

// Find max contour area

double maxArea = 0;

Iterator<MatOfPoint> each = contours.iterator();

while (each.hasNext()) {

MatOfPoint wrapper = each.next();

double area = Imgproc.contourArea(wrapper);

if (area > maxArea)

maxArea = area;

}

// Filter contours by area and resize to fit the original image size

mContours.clear();

each = contours.iterator();

while (each.hasNext()) {

MatOfPoint contour = each.next();

if (Imgproc.contourArea(contour) > mMinContourArea*maxArea) {

Core.multiply(contour, new Scalar(4,4), contour);

mContours.add(contour);

}

}

}

public List<MatOfPoint> getContours() {

return mContours;

}

}
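setHsvColor() turns the touched color and a per-channel radius into the lower and upper bounds that Core.inRange() later checks. The sketch below restates that arithmetic on plain arrays so it can be run without OpenCV. HsvRange and contains() are hypothetical names; contains() only imitates what inRange does for one pixel.

```java
// Hypothetical, OpenCV-free restatement of the bounds computed in setHsvColor().
final class HsvRange {
    final double[] lower = new double[4];
    final double[] upper = new double[4];

    HsvRange(double[] hsv, double[] radius) {
        // Hue is clamped to [0, 255], exactly as in the listing.
        lower[0] = Math.max(hsv[0] - radius[0], 0);
        upper[0] = Math.min(hsv[0] + radius[0], 255);
        // Saturation and value are not clamped in the listing; on 8-bit
        // pixels a bound below 0 or above 255 simply never excludes anything.
        for (int c = 1; c <= 2; c++) {
            lower[c] = hsv[c] - radius[c];
            upper[c] = hsv[c] + radius[c];
        }
        // The fourth channel accepts everything.
        lower[3] = 0;
        upper[3] = 255;
    }

    // True when each of the three HSV channels lies inside its bounds.
    boolean contains(double[] pixel) {
        for (int c = 0; c < 3; c++) {
            if (pixel[c] < lower[c] || pixel[c] > upper[c]) return false;
        }
        return true;
    }
}
```

With the default radius (25, 50, 50) and a touched color of (10, 200, 200), the hue range becomes [0, 35] rather than [-15, 35], so a pixel with hue 20 matches and one with hue 40 does not.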

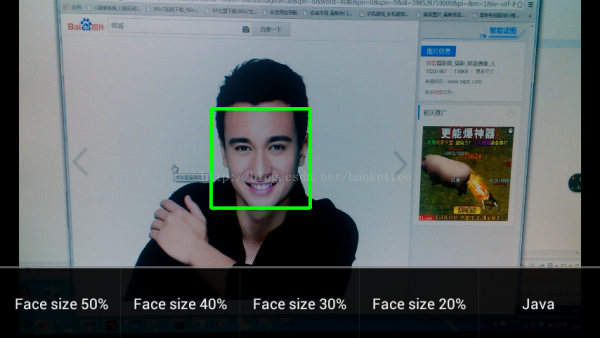

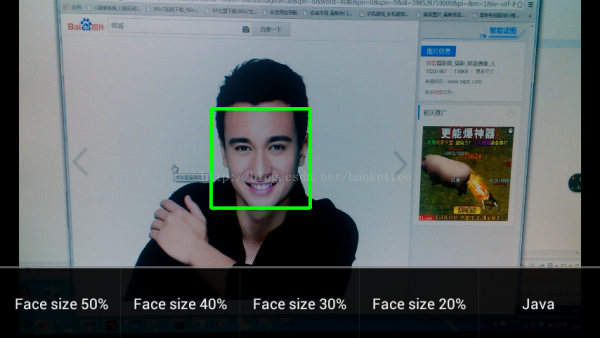

OpenCV Sample - face-detection

This demo consists of two Java files and two C++ files and implements face detection.

The result is shown in the figure:

The view used:

<org.opencv.android.JavaCameraView

android:layout_width="fill_parent"

android:layout_height="fill_parent"

android:id="@+id/fd_activity_surface_view" />

public class DetectionBasedTracker

{

public DetectionBasedTracker(String cascadeName, int minFaceSize) {

mNativeObj = nativeCreateObject(cascadeName, minFaceSize);

}

public void start() {

nativeStart(mNativeObj);

}

public void stop() {

nativeStop(mNativeObj);

}

public void setMinFaceSize(int size) {

nativeSetFaceSize(mNativeObj, size);

}

public void detect(Mat imageGray, MatOfRect faces) {

nativeDetect(mNativeObj, imageGray.getNativeObjAddr(), faces.getNativeObjAddr());

}

public void release() {

nativeDestroyObject(mNativeObj);

mNativeObj = 0;

}

private long mNativeObj = 0;

private static native long nativeCreateObject(String cascadeName, int minFaceSize);

private static native void nativeDestroyObject(long thiz);

private static native void nativeStart(long thiz);

private static native void nativeStop(long thiz);

private static native void nativeSetFaceSize(long thiz, int size);

private static native void nativeDetect(long thiz, long inputImage, long faces);

}

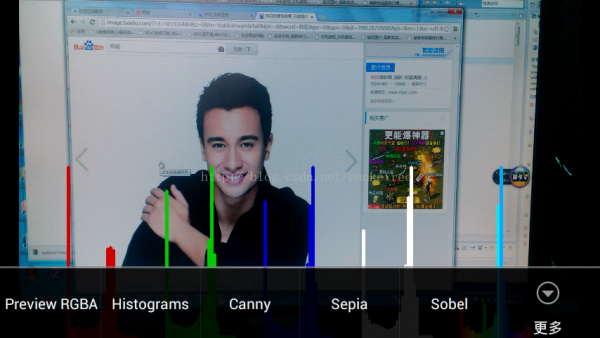

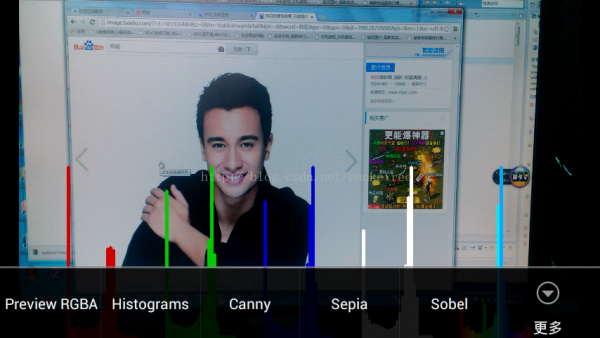

OpenCV Sample - image-manipulations

This demo consists of one Java file and implements image manipulation.

The result is shown in the figure:

The view used:

<org.opencv.android.JavaCameraView

android:layout_width="fill_parent"

android:layout_height="fill_parent"

android:id="@+id/image_manipulations_activity_surface_view" />

The activity implements the CvCameraViewListener2 interface and manipulates each frame there.

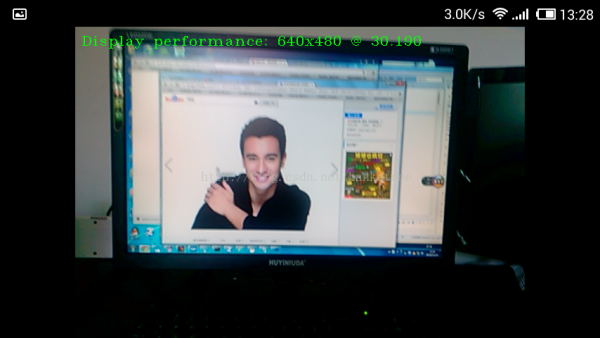

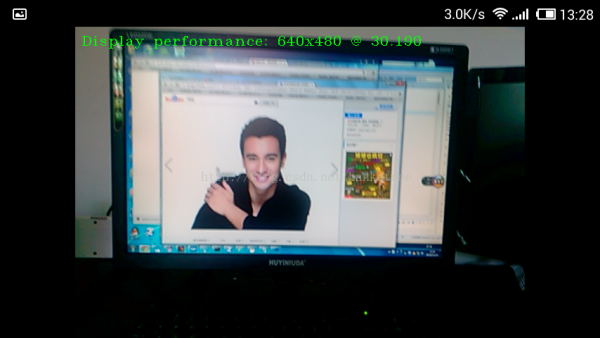

OpenCV Sample - native-activity

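This demo consists of one Java file and one C++ file and displays the image in a native activity.

The result is shown in the figure:

The native code is as follows: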

struct Engine

{

android_app* app;

cv::Ptr<cv::VideoCapture> capture;

};

static cv::Size calc_optimal_camera_resolution(const char* supported, int width, int height)

{

int frame_width = 0;

int frame_height = 0;

size_t prev_idx = 0;

size_t idx = 0;

float min_diff = FLT_MAX;

do

{

int tmp_width;

int tmp_height;

prev_idx = idx;

while ((supported[idx] != '\0') && (supported[idx] != ','))

idx++;

sscanf(&supported[prev_idx], "%dx%d", &tmp_width, &tmp_height);

int w_diff = width - tmp_width;

int h_diff = height - tmp_height;

if ((h_diff >= 0) && (w_diff >= 0))

{

if ((h_diff <= min_diff) && (tmp_height <= 720))

{

frame_width = tmp_width;

frame_height = tmp_height;

min_diff = h_diff;

}

}

idx++; // to skip comma symbol

} while(supported[idx-1] != '\0');

return cv::Size(frame_width, frame_height);

}

static void engine_draw_frame(Engine* engine, const cv::Mat& frame)

{

if (engine->app->window == NULL)

return; // No window.

ANativeWindow_Buffer buffer;

if (ANativeWindow_lock(engine->app->window, &buffer, NULL) < 0)

{

LOGW("Unable to lock window buffer");

return;

}

int32_t* pixels = (int32_t*)buffer.bits;

int left_indent = (buffer.width-frame.cols)/2;

int top_indent = (buffer.height-frame.rows)/2;

if (top_indent > 0)

{

memset(pixels, 0, top_indent*buffer.stride*sizeof(int32_t));

pixels += top_indent*buffer.stride;

}

for (int yy = 0; yy < frame.rows; yy++)

{

if (left_indent > 0)

{

memset(pixels, 0, left_indent*sizeof(int32_t));

memset(pixels+left_indent+frame.cols, 0, (buffer.stride-frame.cols-left_indent)*sizeof(int32_t));

}

int32_t* line = pixels + left_indent;

size_t line_size = frame.cols*4*sizeof(unsigned char);

memcpy(line, frame.ptr<unsigned char>(yy), line_size);

// go to next line

pixels += buffer.stride;

}

ANativeWindow_unlockAndPost(engine->app->window);

}

static void engine_handle_cmd(android_app* app, int32_t cmd)

{

Engine* engine = (Engine*)app->userData;

switch (cmd)

{

case APP_CMD_INIT_WINDOW:

if (app->window != NULL)

{

LOGI("APP_CMD_INIT_WINDOW");

engine->capture = new cv::VideoCapture(0);

union {double prop; const char* name;} u;

u.prop = engine->capture->get(CV_CAP_PROP_SUPPORTED_PREVIEW_SIZES_STRING);

int view_width = ANativeWindow_getWidth(app->window);

int view_height = ANativeWindow_getHeight(app->window);

cv::Size camera_resolution;

if (u.name)

camera_resolution = calc_optimal_camera_resolution(u.name, 640, 480);

else

{

LOGE("Cannot get supported camera camera_resolutions");

camera_resolution = cv::Size(ANativeWindow_getWidth(app->window),

ANativeWindow_getHeight(app->window));

}

if ((camera_resolution.width != 0) && (camera_resolution.height != 0))

{

engine->capture->set(CV_CAP_PROP_FRAME_WIDTH, camera_resolution.width);

engine->capture->set(CV_CAP_PROP_FRAME_HEIGHT, camera_resolution.height);

}

float scale = std::min((float)view_width/camera_resolution.width,

(float)view_height/camera_resolution.height);

if (ANativeWindow_setBuffersGeometry(app->window, (int)(view_width/scale),

int(view_height/scale), WINDOW_FORMAT_RGBA_8888) < 0)

{

LOGE("Cannot set pixel format!");

return;

}

LOGI("Camera initialized at resolution %dx%d", camera_resolution.width, camera_resolution.height);

}

break;

case APP_CMD_TERM_WINDOW:

LOGI("APP_CMD_TERM_WINDOW");

engine->capture->release();

break;

}

}

void android_main(android_app* app)

{

Engine engine;

// Make sure glue isn't stripped.

app_dummy();

size_t engine_size = sizeof(engine); // for Eclipse CDT parser

memset((void*)&engine, 0, engine_size);

app->userData = &engine;

app->onAppCmd = engine_handle_cmd;

engine.app = app;

float fps = 0;

cv::Mat drawing_frame;

std::queue<int64> time_queue;

// loop waiting for stuff to do.

while (1)

{

// Read all pending events.

int ident;

int events;

android_poll_source* source;

// Process system events

while ((ident=ALooper_pollAll(0, NULL, &events, (void**)&source)) >= 0)

{

// Process this event.

if (source != NULL)

{

source->process(app, source);

}

// Check if we are exiting.

if (app->destroyRequested != 0)

{

LOGI("Engine thread destroy requested!");

return;

}

}

int64 then;

int64 now = cv::getTickCount();

time_queue.push(now);

// Capture frame from camera and draw it

if (!engine.capture.empty())

{

if (engine.capture->grab())

engine.capture->retrieve(drawing_frame, CV_CAP_ANDROID_COLOR_FRAME_RGBA);

char buffer[256];

sprintf(buffer, "Display performance: %dx%d @ %.3f", drawing_frame.cols, drawing_frame.rows, fps);

cv::putText(drawing_frame, std::string(buffer), cv::Point(8,64),

cv::FONT_HERSHEY_COMPLEX_SMALL, 1, cv::Scalar(0,255,0,255));

engine_draw_frame(&engine, drawing_frame);

}

if (time_queue.size() >= 2)

then = time_queue.front();

else

then = 0;

if (time_queue.size() >= 25)

time_queue.pop();

fps = time_queue.size() * (float)cv::getTickFrequency() / (now-then);

}

}
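calc_optimal_camera_resolution() walks a comma-separated list such as "1280x720,640x480" and keeps the entry that fits inside the requested size, is at most 720 pixels high, and is closest in height. The following is a rough Java port of that selection rule for experimentation. PreviewSizePicker and pick() are hypothetical names, and malformed entries are skipped, which the C code does not do.

```java
// Hypothetical Java port of the selection rule in calc_optimal_camera_resolution().
final class PreviewSizePicker {
    // Returns {width, height}, or {0, 0} when no supported size fits.
    static int[] pick(String supported, int width, int height) {
        int bestW = 0;
        int bestH = 0;
        int minDiff = Integer.MAX_VALUE;
        for (String entry : supported.split(",")) {
            String[] wh = entry.trim().split("x");
            if (wh.length != 2) continue; // skip malformed entries
            int w;
            int h;
            try {
                w = Integer.parseInt(wh[0]);
                h = Integer.parseInt(wh[1]);
            } catch (NumberFormatException e) {
                continue;
            }
            int wDiff = width - w;
            int hDiff = height - h;
            // Accept only sizes that fit inside the target; among those,
            // prefer the smallest height difference, capped at 720 rows.
            if (hDiff >= 0 && wDiff >= 0 && hDiff <= minDiff && h <= 720) {
                bestW = w;
                bestH = h;
                minDiff = hDiff;
            }
        }
        return new int[] {bestW, bestH};
    }
}
```

For the target 640x480 used in the listing, the list "1280x720,640x480,320x240" selects 640x480. If every supported size is larger than the target, the result is 0x0, which is why the native code checks for a zero width and height before calling set().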

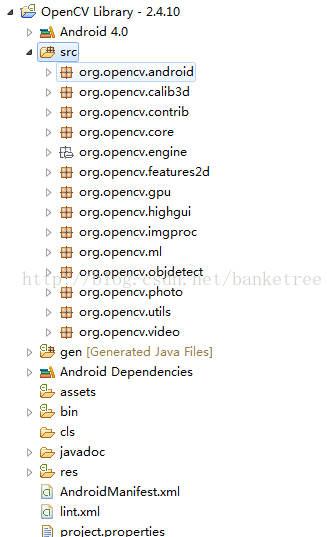

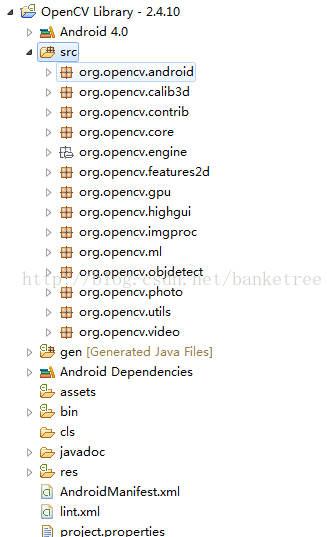
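The main loop of the native activity estimates the frame rate from a queue holding up to 24 recent tick values. Here is a small Java sketch of that sliding-window calculation, mirroring the queue handling of the C++ code. SlidingFps and onFrame() are hypothetical names, and the tick frequency is passed in instead of coming from cv::getTickFrequency().

```java
import java.util.ArrayDeque;

// Hypothetical Java restatement of the FPS estimate in android_main().
final class SlidingFps {
    private static final int WINDOW = 25;
    private final ArrayDeque<Long> ticks = new ArrayDeque<>();
    private final double tickFrequency; // ticks per second

    SlidingFps(double tickFrequency) {
        this.tickFrequency = tickFrequency;
    }

    // Call once per frame with the current tick count; returns the estimate.
    double onFrame(long now) {
        ticks.addLast(now);
        // With a single sample there is no earlier frame, so the listing
        // falls back to 0 and the very first value is not meaningful.
        long then = ticks.size() >= 2 ? ticks.peekFirst() : 0;
        if (ticks.size() >= WINDOW) {
            ticks.removeFirst();
        }
        return ticks.size() * tickFrequency / (now - then);
    }
}
```

While the queue is still filling, N samples span only N-1 frame intervals, so the estimate runs high. Once 25 frames have arrived, the oldest tick is dropped after it has been read, 24 samples are divided by 24 intervals, and the value settles at the true rate.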

OpenCV Library - 2.4.10

This project is the OpenCV Java SDK; it lets Java code work with OpenCV.

As shown in the figure:

A summary follows:

| Package (corresponding library) | Description |
| --- | --- |
| org.opencv.android (libopencv_androidcamera.a) | AsyncServiceHelper: helper that binds and unbinds the service and loads the library. BaseLoaderCallback: implements the loader callback. CameraBridgeViewBase: base class of the camera views, extends SurfaceView. FpsMeter: frame-rate statistics. InstallCallbackInterface: initialization callback interface. JavaCameraView: camera view implemented in the Java layer. LoaderCallbackInterface: loader callback interface. NativeCameraView: native camera view. OpenCVLoader: loads the OpenCV library for a given version. StaticHelper: helper for OpenCVLoader. Utils: miscellaneous helpers. |
| org.opencv.calib3d (libopencv_calib3d.a) | 3D implementation library; also includes the BM block-matching class StereoBM and the SGBM block-matching class StereoSGBM. |
| org.opencv.contrib (libopencv_contrib.a) | Contrib, FaceRecognizer, StereoVar |
| org.opencv.core (libopencv_core.a) | Core library; defines the new image container. Classes: Algorithm, Core, CvException, CvType, Mat, MatOfByte, MatOfDMatch, MatOfFloat, MatOfDouble, MatOfFloat4, MatOfFloat6, MatOfInt, MatOfInt4, MatOfKeyPoint, MatOfPoint, MatOfPoint2f, MatOfPoint3, MatOfPoint3f, MatOfRect, Point, Point3, Range, Rect, RotatedRect, Scalar, Size, TermCriteria |
| org.opencv.engine | OpenCVEngineInterface.aidl |
| org.opencv.features2d (libopencv_features2d.a) | DescriptorExtractor, DescriptorMatcher, FeatureDetector, DMatch, Features2d, GenericDescriptorMatcher, KeyPoint |
| org.opencv.gpu (libopencv_ocl.a) | DeviceInfo, Gpu, TargetArchs |
| org.opencv.highgui (libopencv_highgui.a) | Highgui, VideoCapture |
| org.opencv.imgproc (libopencv_imgproc.a) | CLAHE, Imgproc, Moments, Subdiv2D |
| org.opencv.ml (libopencv_ml.a) | CvANN_MLP_TrainParams, CvANN_MLP, CvBoost, CvBoostParams, CvDTree, CvDTreeParams, CvERTrees, CvGBTrees, CvGBTreesParams, CvKNearest, CvNormalBayesClassifier, CvParamGrid, CvRTParams, CvRTrees, CvStatModel, CvSVM, CvSVMParams, EM, Ml |
| org.opencv.objdetect (libopencv_objdetect.a) | CascadeClassifier, HOGDescriptor, Objdetect |
| org.opencv.photo (libopencv_photo.a) | Photo |
| org.opencv.utils | Converters |
| org.opencv.video (libopencv_video.a, libopencv_videostab.a) | BackgroundSubtractor, BackgroundSubtractorMOG, BackgroundSubtractorMOG2, KalmanFilter, Video |

Other modules:

libopencv_flann.a // feature-matching algorithm implementation

libopencv_legacy.a

libopencv_stitching.a // image stitching

libopencv_superres.a

libopencv_ts.a

OpenCV.mk

#Before building, NDK_USE_CYGPATH=1 must be defined

USER_LOCAL_PATH:=$(LOCAL_PATH) #save the caller's path

USER_LOCAL_C_INCLUDES:=$(LOCAL_C_INCLUDES) #save the caller's variables

USER_LOCAL_CFLAGS:=$(LOCAL_CFLAGS)

USER_LOCAL_STATIC_LIBRARIES:=$(LOCAL_STATIC_LIBRARIES)

USER_LOCAL_SHARED_LIBRARIES:=$(LOCAL_SHARED_LIBRARIES)

USER_LOCAL_LDLIBS:=$(LOCAL_LDLIBS)

LOCAL_PATH:=$(subst ?,,$(firstword ?$(subst \, ,$(subst /, ,$(call my-dir))))) #local path

OPENCV_TARGET_ARCH_ABI:=$(TARGET_ARCH_ABI) #target ABI

OPENCV_THIS_DIR:=$(patsubst $(LOCAL_PATH)\%,%,$(patsubst $(LOCAL_PATH)/%,%,$(call my-dir))) #this directory

OPENCV_MK_DIR:=$(dir $(lastword $(MAKEFILE_LIST))) #directory of this .mk file

OPENCV_LIBS_DIR:=$(OPENCV_THIS_DIR)/libs/opencv/$(OPENCV_TARGET_ARCH_ABI) #library directory for the target ABI

OPENCV_3RDPARTY_LIBS_DIR:=$(OPENCV_THIS_DIR)/libs/3rdparty/$(OPENCV_TARGET_ARCH_ABI) #third-party library directory

OPENCV_BASEDIR:= #base directory

OPENCV_LOCAL_C_INCLUDES:="$(LOCAL_PATH)/$(OPENCV_THIS_DIR)/include/opencv" "$(LOCAL_PATH)/$(OPENCV_THIS_DIR)/include" #local include directories

OPENCV_MODULES:=contrib legacy stitching superres ocl objdetect ml ts videostab video photo calib3d features2d highgui imgproc flann androidcamera core #corresponding library modules

OPENCV_LIB_TYPE:=STATIC #static libraries

OPENCV_HAVE_GPU_MODULE:=off #whether the GPU module is available

OPENCV_USE_GPU_MODULE:=on #whether it is enabled

ifeq ($(TARGET_ARCH_ABI),armeabi-v7a) #ABI is armeabi-v7a

ifeq ($(OPENCV_HAVE_GPU_MODULE),on) #GPU module available

ifneq ($(CUDA_TOOLKIT_DIR),)

OPENCV_USE_GPU_MODULE:=on

endif

endif

OPENCV_DYNAMICUDA_MODULE:=

else

OPENCV_DYNAMICUDA_MODULE:=

endif

CUDA_RUNTIME_LIBS:=

ifeq ($(OPENCV_LIB_TYPE),) #default to shared libraries when no type is given

OPENCV_LIB_TYPE:=SHARED

endif

ifeq ($(OPENCV_LIB_TYPE),SHARED)

OPENCV_LIBS:=java

OPENCV_LIB_TYPE:=SHARED

else

OPENCV_LIBS:=$(OPENCV_MODULES)

OPENCV_LIB_TYPE:=STATIC

endif

ifeq ($(OPENCV_LIB_TYPE),SHARED) #third-party components

OPENCV_3RDPARTY_COMPONENTS:=

OPENCV_EXTRA_COMPONENTS:=

else

ifeq ($(TARGET_ARCH_ABI),armeabi-v7a)

OPENCV_3RDPARTY_COMPONENTS:=tbb libjpeg libpng libtiff libjasper IlmImf

OPENCV_EXTRA_COMPONENTS:=c log m dl z

endif

ifeq ($(TARGET_ARCH_ABI),x86)

OPENCV_3RDPARTY_COMPONENTS:=tbb libjpeg libpng libtiff libjasper IlmImf

OPENCV_EXTRA_COMPONENTS:=c log m dl z

endif

ifeq ($(TARGET_ARCH_ABI),armeabi)

OPENCV_3RDPARTY_COMPONENTS:= libjpeg libpng libtiff libjasper IlmImf

OPENCV_EXTRA_COMPONENTS:=c log m dl z

endif

ifeq ($(TARGET_ARCH_ABI),mips)

OPENCV_3RDPARTY_COMPONENTS:=tbb libjpeg libpng libtiff libjasper IlmImf

OPENCV_EXTRA_COMPONENTS:=c log m dl z

endif

endif

ifeq ($(OPENCV_CAMERA_MODULES),on) #camera modules (shared libraries only)

ifeq ($(TARGET_ARCH_ABI),armeabi)

OPENCV_CAMERA_MODULES:= native_camera_r2.2.0 native_camera_r2.3.3 native_camera_r3.0.1 native_camera_r4.0.0 native_camera_r4.0.3 native_camera_r4.1.1 native_camera_r4.2.0 native_camera_r4.3.0 native_camera_r4.4.0

endif

ifeq ($(TARGET_ARCH_ABI),armeabi-v7a)

OPENCV_CAMERA_MODULES:= native_camera_r2.2.0 native_camera_r2.3.3 native_camera_r3.0.1 native_camera_r4.0.0 native_camera_r4.0.3 native_camera_r4.1.1 native_camera_r4.2.0 native_camera_r4.3.0 native_camera_r4.4.0

endif

ifeq ($(TARGET_ARCH_ABI),x86)

OPENCV_CAMERA_MODULES:= native_camera_r2.3.3 native_camera_r3.0.1 native_camera_r4.0.3 native_camera_r4.1.1 native_camera_r4.2.0 native_camera_r4.3.0 native_camera_r4.4.0

endif

ifeq ($(TARGET_ARCH_ABI),mips)

OPENCV_CAMERA_MODULES:= native_camera_r4.0.3 native_camera_r4.1.1 native_camera_r4.2.0 native_camera_r4.3.0 native_camera_r4.4.0

endif

else

OPENCV_CAMERA_MODULES:=

endif

ifeq ($(OPENCV_LIB_TYPE),SHARED)

OPENCV_LIB_SUFFIX:=so

else

OPENCV_LIB_SUFFIX:=a

OPENCV_INSTALL_MODULES:=on

endif

define add_opencv_module #add an OpenCV module

include $(CLEAR_VARS)

LOCAL_MODULE:=opencv_$1

LOCAL_SRC_FILES:=$(OPENCV_LIBS_DIR)/libopencv_$1.$(OPENCV_LIB_SUFFIX)

include $(PREBUILT_$(OPENCV_LIB_TYPE)_LIBRARY)

endef

define add_cuda_module #add a CUDA module

include $(CLEAR_VARS)

LOCAL_MODULE:=$1

LOCAL_SRC_FILES:=$(CUDA_TOOLKIT_DIR)/targets/armv7-linux-androideabi/lib/lib$1.so

include $(PREBUILT_SHARED_LIBRARY)

endef

define add_opencv_3rdparty_component #add a third-party component

include $(CLEAR_VARS)

LOCAL_MODULE:=$1

LOCAL_SRC_FILES:=$(OPENCV_3RDPARTY_LIBS_DIR)/lib$1.a

include $(PREBUILT_STATIC_LIBRARY)

endef

define add_opencv_camera_module #add a camera module

include $(CLEAR_VARS)

LOCAL_MODULE:=$1

LOCAL_SRC_FILES:=$(OPENCV_LIBS_DIR)/lib$1.so

include $(PREBUILT_SHARED_LIBRARY)

endef

ifeq ($(OPENCV_MK_$(OPENCV_TARGET_ARCH_ABI)_ALREADY_INCLUDED),) #add only if not already included

ifeq ($(OPENCV_INSTALL_MODULES),on)

$(foreach module,$(OPENCV_LIBS),$(eval $(call add_opencv_module,$(module))))

ifneq ($(OPENCV_DYNAMICUDA_MODULE),)

ifeq ($(OPENCV_LIB_TYPE),SHARED)

$(eval $(call add_opencv_module,$(OPENCV_DYNAMICUDA_MODULE)))

endif

endif

endif

ifeq ($(OPENCV_USE_GPU_MODULE),on)

ifeq ($(INSTALL_CUDA_LIBRARIES),on)

$(foreach module,$(CUDA_RUNTIME_LIBS),$(eval $(call add_cuda_module,$(module))))

endif

endif

$(foreach module,$(OPENCV_3RDPARTY_COMPONENTS),$(eval $(call add_opencv_3rdparty_component,$(module))))

$(foreach module,$(OPENCV_CAMERA_MODULES),$(eval $(call add_opencv_camera_module,$(module))))

ifneq ($(OPENCV_BASEDIR),)

OPENCV_LOCAL_C_INCLUDES += $(foreach mod, $(OPENCV_MODULES), $(OPENCV_BASEDIR)/modules/$(mod)/include)

ifeq ($(OPENCV_USE_GPU_MODULE),on)

OPENCV_LOCAL_C_INCLUDES += $(OPENCV_BASEDIR)/modules/gpu/include

endif

endif

#turn off module installation to prevent their redefinition

OPENCV_MK_$(OPENCV_TARGET_ARCH_ABI)_ALREADY_INCLUDED:=on

endif

ifeq ($(OPENCV_MK_$(OPENCV_TARGET_ARCH_ABI)_GPU_ALREADY_INCLUDED),) #add only if not already included

ifeq ($(OPENCV_USE_GPU_MODULE),on)

include $(CLEAR_VARS)

LOCAL_MODULE:=opencv_gpu

LOCAL_SRC_FILES:=$(OPENCV_LIBS_DIR)/libopencv_gpu.a

include $(PREBUILT_STATIC_LIBRARY)

endif

OPENCV_MK_$(OPENCV_TARGET_ARCH_ABI)_GPU_ALREADY_INCLUDED:=on

endif

ifeq ($(OPENCV_LOCAL_CFLAGS),) #compiler flags

OPENCV_LOCAL_CFLAGS := -fPIC -DANDROID -fsigned-char

endif

include $(CLEAR_VARS)

LOCAL_C_INCLUDES:=$(USER_LOCAL_C_INCLUDES) #include paths

LOCAL_CFLAGS:=$(USER_LOCAL_CFLAGS) #compiler flags

LOCAL_STATIC_LIBRARIES:=$(USER_LOCAL_STATIC_LIBRARIES) #static libraries

LOCAL_SHARED_LIBRARIES:=$(USER_LOCAL_SHARED_LIBRARIES) #shared libraries

LOCAL_LDLIBS:=$(USER_LOCAL_LDLIBS) #linker libraries

LOCAL_C_INCLUDES += $(OPENCV_LOCAL_C_INCLUDES) #append include paths

LOCAL_CFLAGS += $(OPENCV_LOCAL_CFLAGS) #append compiler flags

ifeq ($(OPENCV_USE_GPU_MODULE),on) #when the GPU module is used, add the CUDA include path

LOCAL_C_INCLUDES += $(CUDA_TOOLKIT_DIR)/include

endif

ifeq ($(OPENCV_INSTALL_MODULES),on) #

LOCAL_$(OPENCV_LIB_TYPE)_LIBRARIES += $(foreach mod, $(OPENCV_LIBS), opencv_$(mod))

ifeq ($(OPENCV_LIB_TYPE),SHARED)

ifneq ($(OPENCV_DYNAMICUDA_MODULE),)

LOCAL_$(OPENCV_LIB_TYPE)_LIBRARIES += $(OPENCV_DYNAMICUDA_MODULE)

endif

endif

else

LOCAL_LDLIBS += -L$(call host-path,$(LOCAL_PATH)/$(OPENCV_LIBS_DIR)) $(foreach lib, $(OPENCV_LIBS), -lopencv_$(lib)) #append the library search path

endif

ifeq ($(OPENCV_LIB_TYPE),STATIC) #static build: append third-party components

LOCAL_STATIC_LIBRARIES += $(OPENCV_3RDPARTY_COMPONENTS)

endif

LOCAL_LDLIBS += $(foreach lib,$(OPENCV_EXTRA_COMPONENTS), -l$(lib)) #extra system libraries

ifeq ($(OPENCV_USE_GPU_MODULE),on)

ifeq ($(INSTALL_CUDA_LIBRARIES),on)

LOCAL_SHARED_LIBRARIES += $(foreach mod, $(CUDA_RUNTIME_LIBS), $(mod))

else

LOCAL_LDLIBS += -L$(CUDA_TOOLKIT_DIR)/targets/armv7-linux-androideabi/lib $(foreach lib, $(CUDA_RUNTIME_LIBS), -l$(lib))

endif

LOCAL_STATIC_LIBRARIES+=libopencv_gpu

endif

LOCAL_PATH:=$(USER_LOCAL_PATH) #restore the original path

Conclusion

Using OpenCV on Android makes working with images and the camera simple. The next step is to master using OpenCV directly.