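Logs mainly comprise system logs, application logs and security logs. From logs, operations staff and developers can learn about a server's hardware and software, spot errors in the configuration process and find out why they occurred. Analyzing logs regularly shows a server's load, performance and security status, so that problems can be corrected in time.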

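Logs are usually scattered across many machines. If you manage dozens or hundreds of servers and still read logs the traditional way, logging in to each machine in turn, the work quickly becomes tedious and inefficient. The first step is centralized log management, for example collecting the logs of all servers in one place with the open-source syslog.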

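Once logs are centralized, searching them and computing statistics becomes the next chore. Linux commands such as grep, awk and wc can handle basic searching and counting. For more demanding querying, sorting and aggregation across a large number of machines, however, this approach quickly reaches its limits.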
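On a single file, the grep/wc approach is just a line-by-line scan. As a minimal illustration, the stdlib-only Java sketch below does in one pass roughly what `grep -c ERROR`, `grep -c WARN` and so on do. It assumes, although this article does not state it, that each line carries its level as a separate whitespace-delimited token, as in `2016-09-01 10:00:05 WARN slow query`. The class name `LogLevelCounter` is hypothetical.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Counts log lines per level, similar to running `grep -c LEVEL` once per level. */
final class LogLevelCounter {

    // Assumed log format: the level appears as a standalone whitespace-separated token.
    static final List<String> LEVELS = Arrays.asList("ERROR", "WARN", "INFO", "DEBUG");

    static Map<String, Integer> countByLevel(List<String> lines) {
        Map<String, Integer> counts = new LinkedHashMap<>();
        for (String level : LEVELS) {
            counts.put(level, 0);
        }
        for (String line : lines) {
            for (String token : line.split("\\s+")) {
                if (counts.containsKey(token)) {
                    counts.put(token, counts.get(token) + 1);
                    break; // count each line at most once, by its first level token
                }
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        List<String> sample = Arrays.asList(
                "2016-09-01 10:00:00 INFO  app started",
                "2016-09-01 10:00:05 WARN  slow query",
                "2016-09-01 10:00:09 ERROR connection refused");
        System.out.println(countByLevel(sample)); // {ERROR=1, WARN=1, INFO=1, DEBUG=0}
    }
}
```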

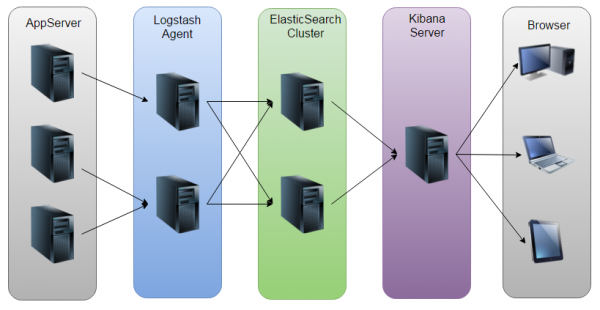

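The open-source real-time log analysis platform ELK solves these problems well. ELK consists of three open-source tools:

- ElasticSearch, for storage and search.
- Logstash, for log collection and processing.
- Kibana, for visualization.

Official website: https://www.elastic.co/products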

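Download the Linux JDK 1.8 package and extract it. Then add the following to `~/.bashrc` (`vi ~/.bashrc`):

```bash
export JAVA_HOME=/usr/local/jdk1.8.0_101
export PATH=$PATH:$JAVA_HOME/bin
export CLASSPATH=.:$JAVA_HOME/lib/tools.jar:$JAVA_HOME/lib/dt.jar:$CLASSPATH
```

Reload the file with `source ~/.bashrc` and check the result with `java -version`.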
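The same check can be made from inside Java by reading the `java.version` system property and the `JAVA_HOME` environment variable. The sketch below turns the version string into a major version. It assumes two formats: the legacy `1.8.0_101` scheme used by JDK 8 and the newer `11.0.2` scheme. The class `JavaVersionCheck` is a hypothetical helper, not part of any tool in this article.

```java
/** Prints the running JVM version and fails if it is older than Java 8 (1.8). */
final class JavaVersionCheck {

    // "1.8.0_101" -> 8 (legacy scheme), "11.0.2" -> 11, "17" -> 17
    static int majorVersion(String version) {
        String[] parts = version.split("[._\\-+]");
        int first = Integer.parseInt(parts[0]);
        return first == 1 ? Integer.parseInt(parts[1]) : first;
    }

    public static void main(String[] args) {
        String version = System.getProperty("java.version");
        String home = System.getenv("JAVA_HOME"); // null if ~/.bashrc was not sourced
        System.out.println("java.version=" + version + ", JAVA_HOME=" + home);
        if (majorVersion(version) < 8) {
            System.err.println("this setup expects JDK 1.8 or newer");
            System.exit(1);
        }
    }
}
```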

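Go to https://www.elastic.co/products and download the Linux archives of ElasticSearch, Logstash and Kibana. Then unpack ElasticSearch and install the head plugin:

```bash
tar -zxvf elasticsearch-2.4.0.tar.gz
cd elasticsearch-2.4.0
./bin/plugin install mobz/elasticsearch-head
```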

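Edit `config/elasticsearch.yml` (`vi config/elasticsearch.yml`). This is a YAML file, so settings are written as `key: value`:

```yaml
cluster.name: es_cluster
node.name: node0
path.data: /tmp/elasticsearch/data
path.logs: /tmp/elasticsearch/logs
# hostname or IP of this machine
network.host: 192.168.245.129
http.port: 9200
```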

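ElasticSearch cannot be started as root. First create a dedicated user, for example `elk`, and give it ownership of the installation directory. Then start ElasticSearch as that user:

```bash
adduser elk
passwd elk                               # enter the password twice
chown -R elk /path/to/elasticsearch      # as root: give elk ownership of the ES install directory
su elk                                   # switch to the elk user before starting
./bin/elasticsearch
```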

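The startup output shows two ports: 9300 is the transport port for communicating with other nodes, and 9200 accepts HTTP requests. Press Ctrl+C to stop it. Alternatively, run ES as a background process:

```bash
./bin/elasticsearch &
```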

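The head plugin installed above lets you interact with the ES cluster from a browser. You can view the cluster state and document contents, run searches and send ordinary REST requests. Open http://192.168.245.129:9200/_plugin/head to view the cluster state. Because `network.host` binds ES to 192.168.245.129, `localhost:9200` will not respond.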
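The cluster state shown by head is also available directly from the REST API. The sketch below calls `GET /_cluster/health` using only the JDK's `HttpURLConnection`. The default host `192.168.245.129:9200` comes from the configuration above. The regex-based extraction of the `status` field is a simplification standing in for a real JSON parser, and `EsHealthCheck` is a hypothetical class name.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.net.HttpURLConnection;
import java.net.URL;
import java.nio.charset.StandardCharsets;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Queries ElasticSearch's cluster health endpoint and prints green/yellow/red. */
final class EsHealthCheck {

    private static final Pattern STATUS = Pattern.compile("\"status\"\\s*:\\s*\"(\\w+)\"");

    // Pulls the "status" value out of the health JSON; returns null if it is absent.
    static String extractStatus(String json) {
        Matcher m = STATUS.matcher(json);
        return m.find() ? m.group(1) : null;
    }

    // Performs a GET request and returns the response body as one string.
    static String fetch(String url) throws IOException {
        HttpURLConnection conn = (HttpURLConnection) new URL(url).openConnection();
        conn.setConnectTimeout(3000);
        conn.setReadTimeout(3000);
        StringBuilder body = new StringBuilder();
        try (BufferedReader in = new BufferedReader(
                new InputStreamReader(conn.getInputStream(), StandardCharsets.UTF_8))) {
            String line;
            while ((line = in.readLine()) != null) {
                body.append(line);
            }
        } finally {
            conn.disconnect();
        }
        return body.toString();
    }

    public static void main(String[] args) throws IOException {
        String base = args.length > 0 ? args[0] : "http://192.168.245.129:9200";
        String json = fetch(base + "/_cluster/health");
        System.out.println("cluster status: " + extractStatus(json));
    }
}
```

On a single node, `yellow` is normal once indices exist: replica shards cannot be allocated on the same node as their primaries.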

Next, unpack Logstash and create a pipeline configuration file:

```bash
tar -zxvf logstash-2.4.0.tar.gz
cd logstash-2.4.0
mkdir config                 # directory for the configuration file
vi config/log_to_es.conf
```

Add the input and output sections:

```conf
input {
  syslog {
    codec => "json"
    port => 514
  }
}

output {
  elasticsearch {
    hosts => ["192.168.245.129:9200"]
  }
}
```

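- input: the `syslog` input receives the logs that logback sends over UDP. The port is set to 514, which is also the default and can be changed as needed. Once Logstash is running, `netstat -anptu` shows it listening on both TCP and UDP:

```
tcp6       0      0 :::514      :::*      LISTEN      2869/java
udp6       0      0 :::514      :::*                  2869/java
```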
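Where `netstat` is not available, a plain TCP connect attempt shows whether something is listening on the TCP side of a port. UDP cannot be probed this way because it is connectionless. The host and ports in `main` are the values used in this article. `PortCheck` itself is a hypothetical, generic helper.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

/** Reports whether a TCP listener accepts connections on host:port. */
final class PortCheck {

    static boolean isListening(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            return false; // connection refused, timed out, or host unreachable/unknown
        }
    }

    public static void main(String[] args) {
        // 514: Logstash syslog input, 9200/9300: ElasticSearch, 5601: Kibana
        int[] ports = {514, 9200, 9300, 5601};
        for (int port : ports) {
            System.out.println(port + " listening: " + isListening("192.168.245.129", port, 2000));
        }
    }
}
```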

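Start Logstash:

```bash
./bin/logstash agent -f config/log_to_es.conf
```

Note: start it as root. Port 514 is a privileged port (below 1024), and ordinary users cannot bind to it.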
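Before wiring up logback, you can test the input end to end by sending one JSON event over UDP to port 514. This is roughly what `LogstashSocketAppender` does in the logging configuration below. The sketch uses only the JDK. The event fields (`app_name`, `level`, `message`), the helper `toJson` and the class name are hypothetical. The 8192-byte guard reflects the 8 KB UDP limit mentioned later in this article. Because the datagram carries no syslog header, the syslog input may tag the event with `_grokparsefailure_sysloginput`, but it should still be forwarded.

```java
import java.io.IOException;
import java.net.DatagramPacket;
import java.net.DatagramSocket;
import java.net.InetAddress;
import java.nio.charset.StandardCharsets;

/** Sends one JSON-encoded test event to the Logstash syslog input over UDP. */
final class UdpTestEvent {

    static final int MAX_DATAGRAM_BYTES = 8192; // from the 8 KB UDP limit mentioned in this article

    // Minimal JSON string escaping: quotes, backslashes and control characters.
    static String escape(String s) {
        StringBuilder sb = new StringBuilder();
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"': sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n"); break;
                case '\r': sb.append("\\r"); break;
                case '\t': sb.append("\\t"); break;
                default:
                    if (c < 0x20) {
                        sb.append(String.format("\\u%04x", (int) c));
                    } else {
                        sb.append(c);
                    }
            }
        }
        return sb.toString();
    }

    // Hypothetical event shape; the field names are illustrative only.
    static String toJson(String appName, String level, String message) {
        return "{\"app_name\":\"" + escape(appName) + "\",\"level\":\"" + escape(level)
                + "\",\"message\":\"" + escape(message) + "\"}";
    }

    static void send(String host, int port, String json) throws IOException {
        byte[] payload = json.getBytes(StandardCharsets.UTF_8);
        if (payload.length > MAX_DATAGRAM_BYTES) {
            throw new IllegalArgumentException("event too large for one datagram: " + payload.length);
        }
        try (DatagramSocket socket = new DatagramSocket()) {
            socket.send(new DatagramPacket(payload, payload.length, InetAddress.getByName(host), port));
        }
    }

    public static void main(String[] args) throws IOException {
        send("192.168.245.129", 514, toJson("Example", "WARN", "toElk test event"));
    }
}
```

If the event does not show up, check for a firewall on UDP 514. UDP gives no delivery error to the sender.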

Unpack Kibana and edit its configuration:

```bash
tar -zxvf kibana-4.6.1-linux-x86_64.tar.gz
cd kibana-4.6.1-linux-x86_64
vi config/kibana.yml
```

```yaml
server.port: 5601
server.host: 192.168.245.129
elasticsearch.url: http://192.168.245.129:9200
kibana.index: ".kibana"
```

Start Kibana:

```bash
./bin/kibana
```

Open http://192.168.245.129:5601 in a browser. Before Kibana can be used, you must configure at least one index name or pattern. It determines which ElasticSearch indices are analyzed.
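The Logstash `elasticsearch` output above sets no `index`, so it writes to the plugin's default daily index `logstash-%{+YYYY.MM.dd}`. The date is taken from the event's `@timestamp` in UTC, which is why `logstash-*` is the usual Kibana index pattern. The hypothetical helper below computes the index a given event lands in, which is handy when querying ElasticSearch directly.

```java
import java.time.Instant;
import java.time.ZoneOffset;
import java.time.format.DateTimeFormatter;

/** Computes the daily index name used by the Logstash elasticsearch output by default. */
final class LogstashIndexName {

    // Mirrors the default "logstash-%{+YYYY.MM.dd}", evaluated in UTC.
    // java.time needs "yyyy" here: its "YYYY" means week-based year.
    private static final DateTimeFormatter DAY =
            DateTimeFormatter.ofPattern("yyyy.MM.dd").withZone(ZoneOffset.UTC);

    static String indexFor(Instant timestamp) {
        return "logstash-" + DAY.format(timestamp);
    }

    public static void main(String[] args) {
        System.out.println(indexFor(Instant.now()));
    }
}
```

Because the day boundary is UTC, events logged late in the evening in a timezone east of UTC (or early in the morning west of it) land in the neighbouring day's index.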

On the application side, Spring Boot with logback ships the logs recorded by logback to ELK.

Custom properties class:

```java
import org.springframework.boot.context.properties.ConfigurationProperties;
import org.springframework.stereotype.Component;
import org.springframework.web.cors.CorsConfiguration;

@Component
@ConfigurationProperties(prefix = "myproperties", ignoreUnknownFields = false)
public class MyProperties {

    private final CorsConfiguration cors = new CorsConfiguration();

    public CorsConfiguration getCors() {
        return cors;
    }

    private final Logging logging = new Logging();

    public Logging getLogging() { return logging; }

    public static class Logging {

        private final Logstash logstash = new Logstash();

        public Logstash getLogstash() { return logstash; }

        // Settings for shipping logs to Logstash
        public static class Logstash {

            private boolean enabled = false;     // must be switched on explicitly
            private String host = "localhost";
            private int port = 5000;
            private int queueSize = 512;         // queue size of the AsyncAppender

            public boolean isEnabled() { return enabled; }
            public void setEnabled(boolean enabled) { this.enabled = enabled; }

            public String getHost() { return host; }
            public void setHost(String host) { this.host = host; }

            public int getPort() { return port; }
            public void setPort(int port) { this.port = port; }

            public int getQueueSize() { return queueSize; }
            public void setQueueSize(int queueSize) { this.queueSize = queueSize; }
        }
    }
}
```

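Spring Boot binds these fields under the `myproperties` prefix. Examples are `myproperties.logging.logstash.enabled=true`, `myproperties.logging.logstash.host=192.168.245.129` and `myproperties.logging.logstash.port=514`. The defaults (`localhost`, port 5000) do not match the Logstash input configured above, so set them explicitly.

Logging configuration class. It requires logback and logstash-logback-encoder on the classpath; the latter provides `LogstashSocketAppender` and `ShortenedThrowableConverter`: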

```java
import ch.qos.logback.classic.AsyncAppender;
import ch.qos.logback.classic.Level;
import ch.qos.logback.classic.LoggerContext;
import ch.qos.logback.classic.spi.ILoggingEvent;
import ch.qos.logback.core.filter.Filter;
import ch.qos.logback.core.spi.FilterReply;
import net.logstash.logback.appender.LogstashSocketAppender;
import net.logstash.logback.stacktrace.ShortenedThrowableConverter;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.context.annotation.Configuration;

import javax.annotation.PostConstruct;

@Configuration
public class LoggingConfiguration {

    private final Logger log = LoggerFactory.getLogger(LoggingConfiguration.class);

    private LoggerContext context = (LoggerContext) LoggerFactory.getILoggerFactory();

    // @Value("${spring.application.name}")
    private String appName = "Example";

    // @Value("${server.port}")
    private String serverPort = "8089";

    @Autowired
    private MyProperties myPro;

    @PostConstruct
    private void init() {
        if (myPro.getLogging().getLogstash().isEnabled()) {
            addLogstashAppender();
        }
    }

    public void addLogstashAppender() {
        log.info("Initializing Logstash logging");

        LogstashSocketAppender logstashAppender = new LogstashSocketAppender();
        logstashAppender.setName("LOGSTASH");
        logstashAppender.setContext(context);
        // Extra JSON fields attached to every event
        String customFields = "{\"app_name\":\"" + appName + "\",\"app_port\":\"" + serverPort + "\"}";

        // Set the Logstash appender config from MyProperties
        logstashAppender.setSyslogHost(myPro.getLogging().getLogstash().getHost());
        logstashAppender.setPort(myPro.getLogging().getLogstash().getPort());
        logstashAppender.setCustomFields(customFields);

        // Log filter: only WARN and ERROR events are sent to the ELK stack
        logstashAppender.addFilter(new Filter<ILoggingEvent>() {
            @Override
            public FilterReply decide(ILoggingEvent event) {
                if (event.getLevel() == Level.ERROR || event.getLevel() == Level.WARN) {
                    return FilterReply.ACCEPT;
                }
                return FilterReply.DENY;
            }
        });

        // Limit the maximum length of the forwarded stacktrace so that it won't exceed the 8KB UDP limit of logstash
        ShortenedThrowableConverter throwableConverter = new ShortenedThrowableConverter();
        throwableConverter.setMaxLength(7500);
        throwableConverter.setRootCauseFirst(true);
        logstashAppender.setThrowableConverter(throwableConverter);

        logstashAppender.start();

        // Wrap the appender in an Async appender for performance
        AsyncAppender asyncLogstashAppender = new AsyncAppender();
        asyncLogstashAppender.setContext(context);
        asyncLogstashAppender.setName("ASYNC_LOGSTASH");
        asyncLogstashAppender.setQueueSize(myPro.getLogging().getLogstash().getQueueSize());
        asyncLogstashAppender.addAppender(logstashAppender);
        asyncLogstashAppender.start();

        // Attach to the root logger so that every logger's events reach Logstash
        context.getLogger("ROOT").addAppender(asyncLogstashAppender);
    }
}
```

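Note: logs can be filtered in two ways. One is a filter on the logback appender, as above. The other is the Logstash `drop` filter. The following drops every event whose message does not start with `toElk`:

```conf
filter {
  if [message] !~ /^toElk/ {
    drop {}
  }
}
```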
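The `toElk` rule can also be applied on the application side, which avoids sending events that Logstash would drop anyway. The stdlib-only sketch below shows just the decision logic. In a real project it would sit inside a logback `Filter.decide` like the level filter above, returning `ACCEPT` or `DENY`. The class name `ToElkPrefixRule` is hypothetical. The match is only approximate: in Logstash's Ruby regexes `^` also matches right after a newline, whereas `startsWith` checks only the very beginning of the message.

```java
/** Approximates the Logstash rule `if [message] !~ /^toElk/ { drop {} }` on the sending side. */
final class ToElkPrefixRule {

    static final String PREFIX = "toElk";

    // true = forward to ELK (FilterReply.ACCEPT in logback), false = drop (FilterReply.DENY)
    static boolean shouldForward(String formattedMessage) {
        return formattedMessage != null && formattedMessage.startsWith(PREFIX);
    }

    public static void main(String[] args) {
        System.out.println(shouldForward("toElk order 42 failed")); // true
        System.out.println(shouldForward("heartbeat ok"));          // false
    }
}
```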

Use supervisor to manage the ELK stack processes.

First install setuptools:

```bash
wget https://bootstrap.pypa.io/ez_setup.py -O - | python
```

Then install supervisor:

```bash
wget https://pypi.python.org/packages/80/37/964c0d53cbd328796b1aeb7abea4c0f7b0e8c7197ea9b0b9967b7d004def/supervisor-3.3.1.tar.gz
tar zxvf supervisor-3.3.1.tar.gz
cd supervisor-3.3.1
python setup.py install
```

Create the supervisor configuration file:

```bash
echo_supervisord_conf > /etc/supervisord.conf
vi /etc/supervisord.conf
```

Uncomment the following lines and change the IP to 0.0.0.0:

```ini
[inet_http_server]         ; inet (TCP) server disabled by default
port=0.0.0.0:9001          ; (ip_address:port specifier, *:port for all iface)
username=user              ; (default is no username (open server))
password=123               ; (default is no password (open server))
```

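Create an init script with `vi /etc/init.d/supervisord`:

```sh
#! /bin/sh

PATH=/sbin:/bin:/usr/sbin:/usr/bin
PROGNAME=supervisord
DAEMON=/usr/bin/$PROGNAME        # adjust if `which supervisord` points elsewhere
CONFIG=/etc/$PROGNAME.conf
PIDFILE=/tmp/$PROGNAME.pid       # matches pidfile= in the generated supervisord.conf
DESC="supervisord daemon"
SCRIPTNAME=/etc/init.d/$PROGNAME

# Gracefully exit if the package has been removed.
test -x $DAEMON || exit 0

start()
{
    echo -n "Starting $DESC: $PROGNAME"
    $DAEMON -c $CONFIG
    echo "..."
}

stop()
{
    echo -n "Stopping $DESC: $PROGNAME"
    supervisor_pid=$(cat $PIDFILE)
    kill -15 $supervisor_pid     # SIGTERM: supervisord stops its programs, then exits
    # wait until supervisord has exited so that "restart" can start it again cleanly
    while kill -0 $supervisor_pid 2>/dev/null; do sleep 1; done
    echo "..."
}

case "$1" in
    start)
        start
        ;;
    stop)
        stop
        ;;
    restart)
        stop
        start
        ;;
    *)
        echo "Usage: $SCRIPTNAME {start|stop|restart}" >&2
        exit 1
        ;;
esac

exit 0
```

Make the script executable with `chmod +x /etc/init.d/supervisord`.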

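Create the program definitions in a file inside `/usr/local/supervisor_ini/`, the directory included from `/etc/supervisord.conf` below. Any name ending in `.ini` works, for example:

```bash
mkdir -p /usr/local/supervisor_ini
vi /usr/local/supervisor_ini/elk.ini
```

Add: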

```ini
[program:elasticsearch]
directory=/usr/local/elasticsearch-2.4.0
command=/usr/local/elasticsearch-2.4.0/bin/elasticsearch
;process_name=elasticsearch ; process_name expr (default %(program_name)s)
user=elk                     ; ES refuses to run as root
numprocs=1                   ; number of processes copies to start (def 1)
priority=1                   ; the relative start priority (default 999)
autostart=true               ; start at supervisord start (default: true)
;startsecs=1                 ; # of secs prog must stay up to be running (def. 1)
startretries=3               ; max # of serial start failures when starting (default 3)
autorestart=true             ; when to restart if exited after running (def: unexpected)
stopasgroup=true             ; send stop signal to the UNIX process group (default false)
killasgroup=true
redirect_stderr=true
stdout_logfile=/tmp/supervisor_elasticsearch.log

[program:logstash]
; no user= here: runs as root so that it can bind port 514
directory=/usr/local/logstash-2.4.0
command=/usr/local/logstash-2.4.0/bin/logstash agent -f /usr/local/logstash-2.4.0/config/log_to_es.conf
;process_name=logstash       ; process_name expr (default %(program_name)s)
numprocs=1                   ; number of processes copies to start (def 1)
priority=2                   ; the relative start priority (default 999)
autostart=true               ; start at supervisord start (default: true)
;startsecs=1                 ; # of secs prog must stay up to be running (def. 1)
startretries=3               ; max # of serial start failures when starting (default 3)
autorestart=true             ; when to restart if exited after running (def: unexpected)
;exitcodes=0,2               ; 'expected' exit codes used with autorestart (default 0,2)
;stopsignal=QUIT             ; signal used to kill process (default TERM)
;stopwaitsecs=10             ; max num secs to wait b4 SIGKILL (default 10)
stopasgroup=true             ; send stop signal to the UNIX process group (default false)
killasgroup=true             ; SIGKILL the UNIX process group (def false)
redirect_stderr=true         ; redirect proc stderr to stdout (default false)
stdout_logfile=/tmp/supervisor_logstash.log ; stdout log path, NONE for none; default AUTO
;stdout_logfile_maxbytes=1MB ; max # logfile bytes b4 rotation (default 50MB)
;stdout_logfile_backups=10   ; # of stdout logfile backups (default 10)
;stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)
;stdout_events_enabled=false ; emit events on stdout writes (default false)
;stderr_logfile=/a/path      ; stderr log path, NONE for none; default AUTO
;stderr_logfile_maxbytes=1MB ; max # logfile bytes b4 rotation (default 50MB)
;stderr_logfile_backups=10   ; # of stderr logfile backups (default 10)
;environment=A="1",B="2"     ; process environment additions (def no adds)

[program:kibana]
directory=/usr/local/kibana-4.6.1-linux-x86_64
command=/usr/local/kibana-4.6.1-linux-x86_64/bin/kibana
;process_name=kibana         ; process_name expr (default %(program_name)s)
numprocs=1                   ; number of processes copies to start (def 1)
priority=3                   ; the relative start priority (default 999)
autostart=true               ; start at supervisord start (default: true)
;startsecs=1                 ; # of secs prog must stay up to be running (def. 1)
startretries=3               ; max # of serial start failures when starting (default 3)
autorestart=true             ; when to restart if exited after running (def: unexpected)
redirect_stderr=true
stdout_logfile=/tmp/supervisor_kibana.log

[group:elk_stack]
programs=elasticsearch,logstash,kibana ; each refers to 'x' in [program:x] definitions
priority=1
```

Edit `/etc/supervisord.conf` and add:

```ini
[include]
files = /usr/local/supervisor_ini/*.ini
```

Start and stop supervisor, and with it the whole ELK stack:

```bash
/etc/init.d/supervisord start
/etc/init.d/supervisord stop
```

Open http://127.0.0.1:9001 in a browser and log in with the configured `user`/`123` to reach the management UI.
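The same `inet_http_server` also exposes supervisor's XML-RPC interface at `/RPC2`, protected by the same username and password. The JDK-only sketch below calls `supervisor.getAllProcessInfo` with HTTP Basic authentication and prints the raw XML response. The URL and the `user`/`123` credentials are the values configured above. The hand-built XML stands in for a proper XML-RPC library, and `SupervisorStatus` is a hypothetical class name.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.net.HttpURLConnection;
import java.net.URL;
import java.nio.charset.StandardCharsets;
import java.util.Base64;

/** Lists supervisor-managed processes (elasticsearch, logstash, kibana) via XML-RPC. */
final class SupervisorStatus {

    // Value for the HTTP "Authorization" header, e.g. "Basic <base64(user:password)>".
    static String basicAuth(String user, String password) {
        String token = user + ":" + password;
        return "Basic " + Base64.getEncoder().encodeToString(token.getBytes(StandardCharsets.UTF_8));
    }

    // XML-RPC request body for a method that takes no parameters.
    static String xmlRpcCall(String method) {
        return "<?xml version=\"1.0\"?><methodCall><methodName>" + method
                + "</methodName><params></params></methodCall>";
    }

    static String call(String endpoint, String user, String password, String method) throws IOException {
        HttpURLConnection conn = (HttpURLConnection) new URL(endpoint).openConnection();
        conn.setRequestMethod("POST");
        conn.setDoOutput(true);
        conn.setRequestProperty("Content-Type", "text/xml");
        conn.setRequestProperty("Authorization", basicAuth(user, password));
        try (OutputStream out = conn.getOutputStream()) {
            out.write(xmlRpcCall(method).getBytes(StandardCharsets.UTF_8));
        }
        try (InputStream in = conn.getInputStream()) {
            ByteArrayOutputStream buf = new ByteArrayOutputStream();
            byte[] chunk = new byte[4096];
            int n;
            while ((n = in.read(chunk)) != -1) {
                buf.write(chunk, 0, n);
            }
            return new String(buf.toByteArray(), StandardCharsets.UTF_8);
        } finally {
            conn.disconnect();
        }
    }

    public static void main(String[] args) throws IOException {
        System.out.println(call("http://127.0.0.1:9001/RPC2", "user", "123", "supervisor.getAllProcessInfo"));
    }
}
```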